Shin Ultra Fight morphs 70s-style monster suits with real-time tools

In the West, the best-known example of a Japanese kaiju character is arguably Godzilla. However, in Japan, there are many famous characters in the genre, including one with various superpowers to rival the titanic might of the sea monster: Ultraman. Broadly analogous to Superman (in that references to the character are made throughout Japanese culture), Ultraman is one of the most prominent tokusatsu heroes.

Ultraman was the title of a Japanese television series broadcast from 1966 to 1967, and also the name of the superhero who vowed to protect justice and peace in the TV show. As soon as it began airing, Ultraman became hugely popular among children and adults in Japan, spawning a number of spin-offs known collectively as The Ultra Series.

One of these spin-offs was Ultra Fight, a low-cost production of five-minute shorts from 1970. Children enthralled by Ultraman who had discovered a love of kaiju through the franchise became fascinated with Ultra Fight, leading to a revival in the popularity of the kaiju genre. Ultra Fight’s popularity rekindled the public’s interest in the Ultras and helped sway the decision to broadcast a new Ultraman series.

Initially, Ultra Fight was produced by reconstructing battle scenes taken from the original Ultraman series. Eventually, however, episodes were produced using new shots, and it was these later episodes that led to the show’s cult-like popularity.

For the newly shot episodes, the production team put actors in monster character suits that had been used for previous live shows and events. Ultra Fight featured intense battles between kaiju on the beach, in the mountains, and on suburban streets. There were no special effects, but these lo-fi kaiju duking it out undeniably had a unique charm.

Though it only ran for a year—1970 to 1971—the quirky, laid-back vibe of Ultra Fight led to the show winning a special place in the hearts of Ultraman fans.

Fifty years later, the concept has been revived. To support the release of the new Shin Ultraman film, Tsuburaya Productions resurrected Ultra Fight and hired Studio Bros to revamp the spin-off series for 2022.

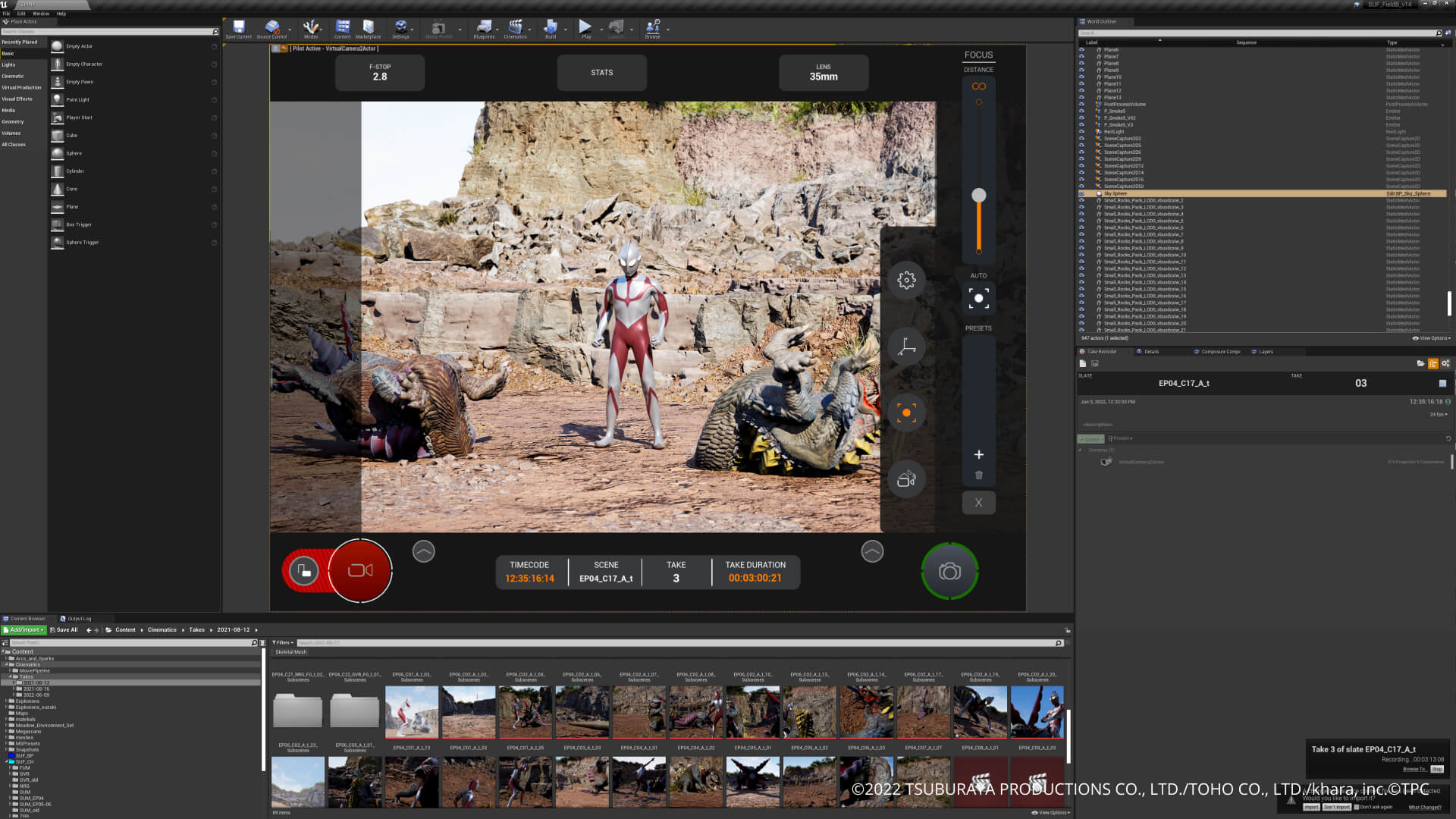

The result is Shin Ultra Fight. This time around, however, the monster character suits would stay in the wardrobe: characters for the new version of the show were created using real-time technology.

For Studio Bros, the challenge was how to retain the charm and quirkiness of the original series, while bringing to bear the latest and greatest real-time technology for the visual effects.

Unreal Engine for film production

Mototaka Kaneko is the founder and president of Studio Bros. He’s been in the media and entertainment industry for over thirty years, starting out as a project manager on films before moving into roles at theme park attractions and on TV production.

The studio he founded focuses on real-time CG content creation across a variety of industries, from film to automotive, education, and beyond. “When I was a student, I used to program ray-traced renderers,” says Kaneko. “I feel as if I’m back to CG after 30 years.”

The studio’s video production pipeline has been focused around Autodesk Maya and Unreal Engine since 2014. “It’s been nine years now,” reflects Kaneko. “I guess we are one of the early adopters that have continuously used Unreal Engine to create video content.”

Kaneko says that the premise—and the biggest challenge—of Shin Ultra Fight is to take the characters featured in the new film Shin Ultraman, which have been created using the latest technology, and fuse that with the unique style and atmosphere that gives the original Ultra Fight its retro charm.

One of the key elements of the original series that contributes to that charm is the quirky movement of the characters.

The original Ultra Fight monsters moved in a very flexible way, quite differently from those of the franchise’s TV series. Kaneko explains that the character suits that appeared in Ultra Fight had often been used for attractions such as live kaiju shows. Unlike the costumes used for film production, they were relatively light and allowed a wide range of motion and ease of movement. This made possible the idiosyncratic choreography you see in the show.

“Our first idea was to use the highly accurate character models that had been created for the movie Shin Ultraman so that we could reproduce the humorous movement shown in Ultra Fight,” says Kaneko.

It took around two months to build five rigs to achieve this. The team aimed to complete as much of the production process as possible in Unreal Engine, while creating some of the character modeling, animation, background, and effects using DCC tools. They used MotionBuilder for character animation, Houdini for some of the effects, and Maya to create control rigs.

Real-time rendering for faster production

Unreal Engine’s Live Link, Take Recorder, and Virtual Camera did a lot of the heavy lifting on the project. “With UE 4.26, we were able to use the vanilla functionality of Virtual Camera without having to modify it on the user side, which made it possible to greatly reduce the cost of the production time,” says Kaneko.

For most of the cuts in the episodes that Studio Bros undertook, they used camera data created with Virtual Camera. “This made the camera data creation process more efficient, so that we could record an average of two episodes per day,” says Kaneko.

After recording with Virtual Camera, Director Shinji Higuchi was able to review the movie at close to final-pixel quality, in real time. If anything needed to be re-recorded, the team could immediately shoot again. “With Unreal Engine, one of the biggest advantages is that all the processes are faster,” explains Kaneko.

Combined with the actors’ motion-captured performances, this Virtual Camera workflow provided the team with the ability to achieve the type of dynamic camera work usually associated with a live-action film—directly in Unreal Engine. They used the engine’s built-in real-time compositing feature Composure to create visual effects such as explosions and sparks, and shooting techniques like cross-dissolve.

Building CG backgrounds in a game engine

If you talk to people about visual effects, they generally think of fire-breathing dragons or futuristic spaceships. However, CG is increasingly used in the subtle art of invisible effects: creating realistic background scenery that is indistinguishable from real life.

This approach was taken to create many of the backgrounds for Shin Ultra Fight, with the team using two tools from within Epic’s broad creation ecosystem: Quixel Megascans and RealityCapture. “We created two types of background assets,” says Kaneko. “One is a Megascans-based background asset, and the other is a photogrammetry-based asset created with RealityCapture. We used one or the other, depending on the episode.”

The team decided that the best short-term approach to creating realistic backgrounds would be to utilize photogrammetry data. “For one of the backgrounds, we captured data from the location of the original Ultra Fight, Shimoda Beach in Izu, to reproduce the background at that time with photogrammetry,” says Kaneko.

For the other background asset, the team used Megascans to reproduce a rock quarry scene that was often used in old TV shows with special effects.

A studio based around real-time tools

As somebody who has based their entire studio around real-time technology, Kaneko is understandably something of a real-time evangelist. “The biggest advantages of using a game engine are that the time required for rendering is much shorter than using traditional software, that effects can be implemented in real time, and that final rendering can be achieved with production quality,” he says.

“Because the turnaround in production becomes faster, using a game engine can bring overwhelming advantages if you need to complete a project in a short time, like on our project.”

For Studio Bros, combining the power of AWS Thinkbox Deadline with Unreal Engine super-charges the production process, saving both time and money. “We can now output about 200 seconds (about 6,000 frames) of a 4K/32-bit EXR file overnight,” says Kaneko. “We can work on tasks far faster than with traditional CPU renderers and less expensively and faster than GPU renderers.”
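The figures Kaneko quotes can be checked with simple arithmetic. The minimal sketch below derives the frame rate implied by "about 200 seconds (about 6,000 frames)" and estimates an average time budget per frame. The 12-hour "overnight" window is purely an assumption for illustration; the article does not say how long the render runs or how many machines share the work, and the function names are hypothetical.

```python
# Figures quoted in the article (both are stated as approximate).
DURATION_SECONDS = 200
FRAME_COUNT = 6_000

# Assumption (not from the article): "overnight" means a 12-hour render window.
ASSUMED_OVERNIGHT_HOURS = 12


def implied_frame_rate(frames: int, seconds: float) -> float:
    """Frames per second implied by a frame count and a running time."""
    return frames / seconds


def seconds_per_frame(frames: int, window_hours: float) -> float:
    """Average wall-clock seconds available per frame within a render window."""
    return window_hours * 3600 / frames


if __name__ == "__main__":
    fps = implied_frame_rate(FRAME_COUNT, DURATION_SECONDS)
    budget = seconds_per_frame(FRAME_COUNT, ASSUMED_OVERNIGHT_HOURS)
    print(f"Implied frame rate: {fps:.0f} fps")
    print(f"Average budget per frame (assumed window): {budget:.1f} s")
```

The per-frame figure is an average across the whole render farm under the assumed window, not a measurement of any single machine.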

Source: UBSOFT
