Ten Oscar hopefuls vie for nominations at the Academy of Motion Picture Arts & Sciences’ annual Visual Effects Bake-Off.

2019 Academy VFX Bake-Off: Celebrating A Year of Excellence in Visual Effects

Each year, the Academy of Motion Picture Arts and Sciences brings the visual effects community together for its annual VFX Bake-Off, where visual effects artists from the 10 shortlisted films, competing for the five Oscar nominations, step onstage to share insights and anecdotes from their work. Held this past Sunday at the Samuel Goldwyn Theater in Beverly Hills, this year’s event saw a number of significant and welcome changes, including livestreaming, a new voting process, and a new presentation format designed to better inform voting members about the scope, complexity and artistry of the work being featured.

Thankfully, the trusty red light of shame was still standing tall off to the side of the lectern, switching on when allotted talk time was up. New this year, however, was an online voting system, replacing the previous manual system that required attendance at the event in order to cast a vote. The event was livestreamed to groups of Academy Visual Effects Branch members in San Francisco, London and New Zealand, with all votes due the following Monday. The event’s starting time was moved earlier in the day, to 1:00PM, to accommodate the new audiences’ time zone differences.

Also, for the first time, presenters were able to share three-minute behind-the-scenes and before-and-after reels that highlighted the visual magic behind each film’s array of incredibly impressive visual effects. Voting members could now actually watch how certain sequences were produced, including the work on invisible effects that many would never have guessed were even produced digitally. This was followed by the same standard 10-minute film highlight reel, and then by a new, longer onstage Q&A session led by VFX industry icons and reigning VFX Branch Governors Craig Barron, John Knoll and Richard Edlund.

Forgoing the usual lottery system, the 10 shortlisted films were presented in alphabetical order. Read the highlights from each presentation below, along with trailers, featurettes and VFX breakdowns:

Ant-Man and The Wasp (Walt Disney Studios)

VFX Supervisor: Stephane Ceretti

Ceretti led off the first of the day’s presentations by stressing the difficulty of the effects, as well as the short post schedule on the Marvel film. “With only six months of post and 1,450 shots, the movie had its share of complexity, variety and intricate visual design.” He shared how one film highlight, an elaborately staged car chase through the streets of San Francisco, was actually filmed on the East Coast. Huge parts of the city were scanned, then used to re-plate scenes shot in Atlanta. “Seventy-five percent of the shots” were produced that way, he noted.

Though an action film by pedigree, Ant-Man and The Wasp is a comedy at heart, with the physicality of shifting character size as they move about the city often being featured for humorous effect. “There were many comedic moments in the film,” Ceretti described. “Working with Peyton [Reed, the film’s director], while we were doing previs based on the script, he said this film is a comedy. There were a lot of very human moments in the film as well, but we tried to find the visual gags.” Taking out bad guys with a giant Pez dispenser and a restaurant salt shaker, shrinking Dr. Hank Pym’s lab building down to the size of a small suitcase, Ant-Man using a flatbed truck like a toddler’s scooter, were all shown to demonstrate both the film’s humor and deft integration of characters, props and environments that were constantly shifting size.

Avengers: Infinity War (Walt Disney Studios)

VFX Supervisor: Dan DeLeeuw

All told, the Marvel production involved 14 visual effects houses that created 25 digital characters, 12 digital environments, and 2,622 visual effects shots in the final tally. It’s a shame that by focusing on the sheer magnitude and importance of Thanos, a digital character whose emotional performance, based on actor Josh Brolin, is pivotal to the story, DeLeeuw was only able to share insights on a handful of the film’s other visual effects highlights. But no doubt, Thanos is a tour-de-force character that, according to DeLeeuw, pushes the boundaries of what a digital character could do and how, more importantly, it could act alongside live-action characters. “With close to an hour of screen time, it was crucial that Thanos embody as much of Josh Brolin’s performance as possible,” he shared. Noting Brolin’s importance to the character as well as the film’s enormous success, DeLeeuw told the crowd, “And a special shout out to Josh Brolin, who let us do all our crazy things to him, get made fun of by Robert Downey Jr. and still give the performance of a lifetime.”

Other areas highlighted by DeLeeuw included actor Peter Dinklage’s role as Eitri, a 16-foot dwarf, photographed on a scale set and then composited into live-action photography, as well as the film’s dramatic ending, where actors had to suddenly transition to digital characters as their bodies turned to dust and floated away as Thanos destroyed half the universe. For the epic final battle in Wakanda, ILM built a massive environment consisting of digital canyons, savannahs and trees to turn an Atlanta horse farm into Africa. Special effects created a 380-foot river that contained 350,000 gallons of water with a flow rate of 27,000 gallons per minute.

Black Panther (Walt Disney Studios)

VFX Supervisor: Geoffrey Baumann

Baumann framed his presentation around how stylized visual design was used to create the look and feel of a hidden Vibranium technology-based society. “The film was a collaboration of visual and special effects that allowed Black Panther to become a manifestation of art, science and African culture,” he explained.

Highlights of the 2,456 visual effects shots included creation of the city of Wakanda, the massive cityscape, majestic waterfalls and rivers, special fighting suits and the unique visual signature of harnessed Vibranium sound vibration technology. Panther and Killmonger suits instantly formed using nanite technology, where particles were emitted from a necklace wrapping around either warrior’s body. “A complex Houdini system created textures in the model to drive the formation of the suits as the clothing burned off and fell away,” Baumann noted. “Due to the complexities of the system, the simulations were generated on a character in a neutral pose and then run through a transform matrix to push the animation onto a character’s position within the shot.”
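The neutral-pose approach Baumann describes can be illustrated with a small sketch. This is not the production's Houdini setup. It is a minimal Python illustration that assumes a single rigid transform (a rotation about the up axis plus a translation), and the function names `make_transform`, `apply_transform` and `push_to_shot` are hypothetical. A real shot would most likely need per-frame, per-joint transforms rather than one matrix.

```python
# Hypothetical sketch: points simulated on a neutral-pose character are
# moved into a shot by applying one 4x4 transform matrix to each point.
import math


def make_transform(yaw_degrees, translation):
    """Build a 4x4 matrix that rotates about the Y (up) axis, then translates."""
    angle = math.radians(yaw_degrees)
    c, s = math.cos(angle), math.sin(angle)
    tx, ty, tz = translation
    return [
        [c,   0.0, s,   tx],
        [0.0, 1.0, 0.0, ty],
        [-s,  0.0, c,   tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(matrix, point):
    """Apply a 4x4 matrix to a 3D point using homogeneous coordinates."""
    x, y, z = point
    v = (x, y, z, 1.0)
    return tuple(sum(matrix[r][k] * v[k] for k in range(4)) for r in range(3))


def push_to_shot(matrix, particles):
    """Move every simulated particle from neutral-pose space into shot space."""
    return [apply_transform(matrix, p) for p in particles]


# Illustrative data only: two particles near the chest of a neutral-pose character.
neutral_particles = [(0.0, 1.5, 0.0), (0.1, 1.4, 0.0)]
shot_matrix = make_transform(90.0, (10.0, 0.0, -4.0))
print(push_to_shot(shot_matrix, neutral_particles))
```

The appeal of this pattern is that the expensive simulation runs once in a stable, predictable pose, and only a cheap matrix multiply is repeated for each shot.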

Baumann showed how cymatic patterns were used as a design guide for Panther’s energy release as well as Klaue’s sonic disrupter. In one key South Korean chase scene, Okoye and Nakia are riding in a car that, when hit by the weapon, is blasted into pieces. “The energy waves can disassemble cars to the extent they needed to be modeled inside and out, allowing the animators and effects artists the ability to art direct the sprawling debris,” he shared.

The Wakandan skyline merged designs inspired by African culture with modern architectural elements. According to Baumann, ILM built the city and surrounding hills with 57,000 individual buildings, 50 million trees and 20,000 Wakandan citizens in the streets and city parks, all with no concrete; everything was rooted in African design and colors. The studio had to create a proprietary crowd system and reduce their memory footprint for rendering the architecture by optimizing materials into a master list that would represent the basis for their applied palette. For the Victoria Falls-inspired river and waterfalls, special effects installed pumps and plumbing that flowed 30,000 gallons of water a minute to feed a 30-foot waterfall and pool.

“In the story about the dramatic struggle of a king and his people, we weren’t asked to destroy worlds or create giant digital monsters,” Baumann concluded. “We were asked to create a coherent and culturally significant world for Ryan [Coogler, the film’s director] to tell his story in. We were asked to turn the stages of Atlanta into an African kingdom in which every effect, weapon or environment contributed to the fabric of history and technology reinforcing the stakes in this dramatic narrative.”

Christopher Robin (Walt Disney Studios)

VFX Supervisor: Christopher Lawrence

With no alien worlds, spaceships, or explosions of any kind, Christopher Robin was a unique entry on the day’s schedule, though its 1,374 visual effects shots required as much effort as any of the other nine featured films. Lawrence noted right up front that the “story hinges around people being enlightened by the belief that stuffed toys can walk and talk.” Because the film used a lot of handheld cameras often shooting on location in natural light, the visual effects needed to fit into Christopher Robin’s world invisibly, “so we could tread the fine line between imagination and reality.” Critical to the believability and emotion of the film was that these stuffed toys needed to feel like they were really there.

The main challenge was getting the animation to reflect how the articulation of a real stuffed toy would move. Director Marc Forster didn’t want to use standard classical animation techniques, so Lawrence’s animation team came up with a range of motion that was subtle but could be animated with enough contrast to produce feeling, believable performances. “There were lots of tricks we used to hide expression changes,” Lawrence added.

One funny clip showed puppeteers busily at work on set around a picnic table, manipulating various stuffed animal puppets. They helped frame the shots, which provided valuable lighting reference as well.

The CG build of lead character Winnie-the-Pooh was phenomenally detailed, matching his costume stitch for stitch. A volumetric shader was created to deal with the soft woolen jumpers. One challenge highlighted was how they simulated the toy’s hairs coming in contact with ponds, the rain, and leaf mulch being pushed around. Other elements shown included environments such as foggy woods, a fully digital train station, the fully CG interior of Pooh’s house and a steamer trunk bouncing down a London street after falling off the back of a truck.

First Man (Universal Pictures)

VFX Supervisor: Paul Lambert

To summarize Lambert’s presentation, First Man is a production that redefines the phrase “shooting in-camera.” The tragic Apollo 1 launch fire sequence was captured in-camera. Even the reflections in the astronauts’ visors and actors’ eyes were done in-camera. The production seamlessly integrated visual effects, special effects, archival footage and miniature models to create the 1960s documentary style the director, Damien Chazelle, was looking for.

Various spacecraft were shot in front of a 60-foot wide LED screen, on which fully rendered, 360-degree spherical images were played back. Actors were placed in front of it on 6-axis gimbals. “We were able to sync the gimbal directly to the rotation of the content on the screen without any form of optical tracking,” Lambert explained.

A model of a Saturn V rocket at 1/30 scale was built, as well as 1/6 scale models of the LM (Lunar Module) and CSM (Command and Service Module). Models and CG were mixed and matched during closeup and wide shots. They were able to attach cameras to the miniatures, which helped capture the desired documentary look and feel. This also helped get across the idea of the fragility of the spacecraft and trips, and that these missions used the cutting-edge technology of the day. The models were built using 3D printers, with sequenced laser cutting to make the components, which were hand painted and assembled.

In addition, the special effects team built a full-scale Lunar Landing Training Vehicle. “We built a full-scale LLTV and hung it from computer-controlled cranes that fooled one of the NASA advisors we had in post into believing we had truly resurrected one of the original LLTVs,” Lambert noted with a wry smile.

The production was given complete access to NASA’s archives. Footage found and used was first restored to pristine condition, then degraded once again to conform to the look of the 16 and 35mm shoot photography. According to Lambert, “We used matte paintings, CG and additional effects elements,” the results of which were then combined with the rendered launch footage, reframed and played back on the LED screen for interior shots.

“At the end of the movie, we worked from full frame, 6K IMAX footage shot at 36 frames per second,” Lambert concluded. “We extended the utilization photography and had to clean up the visors which showed the camera and everything else on set.” Additionally, they added CG dust, a simulation for the moon’s gravity as well as cleanup of the calibrated bungee cords attached to the astronauts that helped them simulate walking on the moon.

Jurassic World: Fallen Kingdom (Universal Pictures)

VFX Supervisor: David Vickery

Since when did only 1,200 visual effects shots seem like such a paltry number? With 70 minutes of VFX content, however, Vickery’s teams generated a variety of stunning visuals that were positively “volcanic” in scope. In what seemed like the afternoon’s main cinematic theme, he described how one of their big challenges was capturing as much practically as possible. “The sheer scale of destruction on Isla Nublar meant a lot of our environments needed to be fully digital,” he noted. “But, it was still crucial to make the foundation of those shots be practical photography.”

According to Vickery, the most complex work was the eruption of Mount Sibo. “We modeled the digital events on the real eruption of Batu Tara in Indonesia, using Houdini to create detailed explosions, pyroclastic flows and lava projectiles.”

In one of the day’s most humorous recollections, Vickery shared the genesis of the underwater gyrosphere sequence. In a pre-production meeting, when discussing how they’d capture the actors as the gyrosphere flew off a cliff into the ocean, someone suggested going to a theme park like Disneyland. Comments were made on the difficulty of such a shoot: how could that be filmed, they’d have to shut down the ride, get health and safety involved, set up green screens, all to get footage that probably wouldn’t look that good. “Paul [Corbould, the film’s special effects supervisor] sat at the other end of the table. I could see him thinking. Then he said [meekly] ‘I could build you one.’ So, we engineered a 40-foot tall roller coaster to capture a truly genuine reaction” from the actors. “It generated some incredibly unflattering faces of fear in the actors,” Vickery added with a chuckle.

For the film, ILM created a new range of creature performance puppets to represent the dinosaurs on set. “The resulting shots benefitted from the tangible interactive energy in the camera, cast and direction that would have been missing if we had used a more conventional approach,” Vickery noted.

The film also made extensive use of animatronics for up-close dinosaur interactions, 1:1 scale models of dinosaurs that were 3D printed by Neal Scanlan’s team. For Vickery, it was critical to seamlessly intercut between digital and physical creations. While various dinosaur parts were replaced in post, the VFX team always stayed faithful to the original animatronic performance. “Our goal was to keep the audience guessing as to whether they were watching a digital effect or not,” he concluded.

Mary Poppins Returns (Walt Disney Studios)

VFX Supervisor: Matt Johnson

Johnson began his presentation by sharing that, “Creating the visual effects for Mary Poppins Returns was both an honor and a challenge. We needed our work to be relevant to the first iconic film, but also showcase a 21st century combination of practical effects, cutting edge CG and traditional hand-drawn artistry.” He went on to highlight several key areas of the production.

First, he described how the bathtub scene, where the actors are transported above and below a foam-covered sea, required extensive wire work, practical rigs, and full CG environments complete with moving coral and kelp, shoals and digital fish.

Next, he talked about how the film makes innovative use of hand-drawn 2D animation integrated with CG elements, along with a host of animal characters and live-action actors. The animation sequences hark back to the original 1964 film, Mary Poppins, yet bring things up to date with great fluidity of staging, camera movement and lighting. “It was a literal ‘back to the drawing board’ blend of traditional hand-drawn character animation, live-action performance, sophisticated practical rigs and complex computer graphics,” Johnson explained. A fully 3D set was created, allowing the director freedom to run scenes like a Broadway show without requiring extensive re-working by the animators as he made changes to the staging. The set needed to feel hand-drawn while still shading correctly in the CG lights. “Hybrid models were developed that carried through the flat color look of the original artwork while giving controllable diffuse and specular responses to the digital lights,” he noted.

In the elaborate chase scene, much of the look was designed as an homage to Disney’s innovative multi-plane cameras. However, the number of layers needed and complexity of the lighting meant a CG solution was required. Johnson also mentioned that in the forest environment, many of the trees were built with artificially flat profiles to enhance the 2D feel and that ultimately, there were hundreds of layers involved.

One of the big challenges for the film was finding enough 2D animators. To lead the team, they chose Ken Duncan, of Duncan Studios, who “pulled out his Rolodex,” even getting people to come out of retirement, to work on the film. It was actually harder finding people for the ink and paint and cleanup departments than for the animation department. But even more difficult was the challenge of integrating the 2D characters into the 3D world. 2D was done by hand on paper and then scanned. VFX artists worked alongside the 2D animators in order to enhance their creative collaboration.

Johnson spoke briefly about recognizing the original work of Peter Ellenshaw from the first film – one of his original paintings is actually used in the new film’s credit sequence. He also spoke briefly about how wire work was key to the film, especially because the director, Rob Marshall, comes from a Broadway background. “We were basically making a Broadway show,” he said. “He didn’t want digital doubles. He wanted it to happen live on stage.” For both live-action and animated sequences, Marshall put all the characters where he wanted them in 3D space, and it was the VFX team’s job to execute, get the reflections, shadows and lights set properly.

Johnson concluded with the same sentiment he began with. “We started Mary Poppins Returns hoping to live up to our collective childhood memories and to make something special that would resonate today, with a team of artists around the world using everything from pencils and paper to computers and baling wire all in the pursuit of something truly magical.”

Ready Player One (Warner Bros. Pictures)

VFX Supervisor: Roger Guyett

According to Guyett, “The challenge on Ready Player One was to bring together the futuristic 2045 world of Ohio with the immersive VR world of the Oasis game, filled with every conceivable avatar and environment you could think of,” while always striving to match the inventiveness of Ernest Cline’s book and packing the film with as much 1980s nostalgia as humanly possible.

Building the real world of Ohio involved environments, vehicles, digital pyrotechnics and effects work, plus practical special effects. Building the virtual world of the Oasis was an order of magnitude more difficult. “Designing and building the Oasis was extremely ambitious,” Guyett explained. “Thousands of characters and props were modeled. Whether it was creating the Akira cycle, the Back to the Future DeLorean, anime characters like Gundam or any number of provisional characters or environments, every detail had to be accounted for.” All told, 63 environments were designed and built, ranging from sets featuring War of the Worlds and The Shining to Planet Doom. Scenes were constructed from huge quantities of mocap data and keyframe animation, which required ILM to upgrade their facial capture system to increase fidelity.

The film was a licensing nightmare. “Everyone wanted to see their character used in something fabulous,” Guyett noted. The sheer number of varied and one-off characters was enormous. “Over half a million characters storm the castle, and those are just the good guys,” he added. In keeping with the 1980s pop reference theme, the “epic” 3rd act battle included an “epic” brawl between the Iron Giant and Mechagodzilla.

In the film, one of the “challenges” our heroes attempt to conquer within the Oasis takes place in recreated sets from Stanley Kubrick’s The Shining. Guyett shared how it was director Steven Spielberg’s idea to use that film; he had visited Kubrick during the shoot. Guyett initially wanted to use the actual movie footage for a Forrest Gump approach. However, as they tested a digital build of the famous bathroom scene, being able to reframe everything convinced them they needed to recreate it all. The one exception was the woman in the bathtub, for which they used original footage as she pulls back the shower curtain.

The production made extensive use of mocap. “With mocap, we had a huge virtual production pipeline,” Guyett described. “Steven could shoot a lot of the shots, or at least rough them in, and then we could start seeing the sequences come together.” A lot of the wider epic shots were prevised for the details. The director would do a virtual camera pass on many of the shots, giving the team material they could edit with.

Solo: A Star Wars Story (Walt Disney Studios)

VFX Supervisor: Rob Bredow

Bredow and his VFX teams produced over 1,800 shots for the film, with the express goal of honoring the classic Star Wars with a 1970s look.

He described how every stunt was real in the exciting speeder chase, shot on location at factories and docks using 525 horsepower action vehicles. Next, he shared how a 40-ton rig that could hydraulically tilt onto its side in 3 seconds was built for the train heist sequence. “That thing was a beast,” Bredow noted with a grin. The live-action shoot, high in the Italian Alps, wasn’t just done for reference. The scene’s flight lines were carefully techvized around the real mountains. For shots they couldn’t get because of safety concerns, they photo-modeled hundreds of square miles of canyons, effectively creating 3D background plates with the lighting baked in.

One of the film’s most challenging visuals was a massive explosion at the conclusion of the heist. “We were looking for inspiration to try to deal with ‘a Star Wars explosion’… the script had that terrible line: an explosion like one we’ve never seen before,” Bredow lightheartedly explained. YouTube research led them to The Slow Mo Guys, who had done a unique explosion inside a fish tank in their backyard. Bredow’s team took it from there, placing a tiny underwater charge inside a fish tank, then shooting against a 3D printed mountain at 25,000 frames per second. The actual explosion photographed in the fish tank was used in the film.
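To give a sense of what a 25,000 frames-per-second capture rate means on screen, here is a rough back-of-the-envelope sketch. It assumes playback at the standard theatrical rate of 24 frames per second, which is not stated in the presentation, and the function names are hypothetical. The finished shot may well have been retimed, so treat the numbers as illustrative only.

```python
# Rough illustration: how much a high-speed capture stretches real time
# when played back at a normal projection rate.
# ASSUMPTION: 24 fps playback; the article only gives the capture rate.

def slowdown_factor(capture_fps, playback_fps=24.0):
    """How many times slower than real life the footage appears."""
    return capture_fps / playback_fps


def screen_seconds(real_seconds, capture_fps, playback_fps=24.0):
    """On-screen duration of an event that lasted real_seconds in reality."""
    return real_seconds * slowdown_factor(capture_fps, playback_fps)


factor = slowdown_factor(25_000)
print(f"Slowdown: about {factor:.0f}x")
print(f"A 10 ms burst would fill about {screen_seconds(0.010, 25_000):.1f} s of screen time")
```

Under that assumption, even a blast lasting a few thousandths of a second yields several seconds of usable footage, which helps explain why a tiny charge in a fish tank could stand in for a mountain-sized explosion.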

Bredow described how they recreated the famous Sabacc game and noted that if you look around the table, you’ll see the character of Six Eyes, who was done entirely practically using a very sophisticated inertial-based rig.

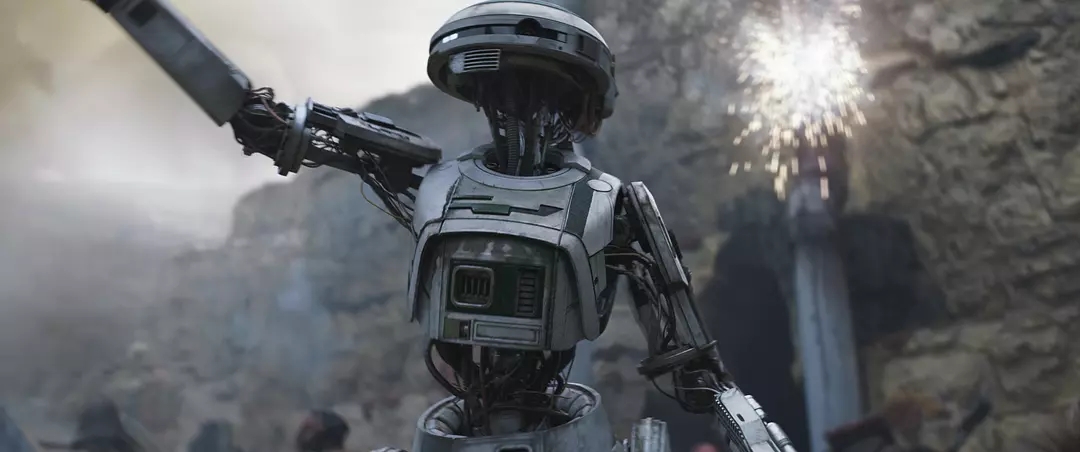

He went on to note that there were 120 creatures in the film, more than on any Star Wars film to date. Lando Calrissian’s droid partner, L3-37, was played by Phoebe Waller-Bridge, who wore a practical suit that was finished with a CG interior, which required precise animation and lookdev that was indistinguishable from the live costume. “It matched so closely I stopped trying to guess what was practical and what was digital because everyone could fool me,” Bredow mused.

Bredow concluded by sharing how they handled filming the Millennium Falcon, in particular on the massive Kessel Run sequence. They wanted the Falcon cockpit finals to be shot in-camera for the first time ever on a Star Wars film. So, they built a 180-degree wraparound rear projection screen and created over 20 minutes of final quality 8K footage to be viewed out the window of the ship. They provided director Ron Howard with an assortment of real-time on-set dynamic controls so they could capture everything in-camera, down to the highlight of hyperspace in Solo’s eye. In addition, they lit the sequence from the rear projection screen, which gave a totally different look, and scene composition, than if they’d shot on greenscreen.

Bredow also shared an amusing story about how, in initial rehearsals for the scene, they didn’t tell the actors what would be happening. “We put the actors in the cockpit. At the first rehearsal, at the end of the sequence, they are just looking at a static starfield. Donald Glover and Phoebe Waller-Bridge, in the front seat, push the hyperspace lever. Right then, we shook the cockpit, played the hyperspace on the screen and the whole cast screamed, ‘No way, we’re in Star Wars! It’s real!’ After they finally calmed down, Donald Glover, who was leaning over and had his mic on, said, ‘This is the coolest thing I have ever done.’”

Welcome to Marwen (Universal Pictures)

VFX Supervisor: Kevin Baillie

Baillie began his presentation by addressing a common sentiment that I’m sure was going through the minds of many in attendance. Describing the 46 minutes of the film that take place within Mark Hogancamp’s unique imagination, where dolls come to life, he stated that there was a huge amount of visual character work that had to steer clear of the uncanny valley. “Director Bob Zemeckis told me he didn’t want to take any crap for mocap dead eyes and I was on the hook for figuring it out.” A fairly formidable challenge, considering the fate of the entire film rested on a successful resolution to that very issue.

To meet the challenge, Baillie’s team started with live-action tests that, to his dismay, looked horrible. “We actually shot a live-action test of Steve Carell in full costume, totally lit,” he described. “Our original idea was to augment him with digital doll joints and slim him like Skinny Steve in Captain America. But, it just ended up looking like Steve Carell in a high-end Halloween costume and something was wrong with him.” From there, however, they did further tests with the captured performance, using what they called a “ski mask,” putting eyes and a mouth on a digital body. After a little tweaking to get it to not look like Annoying Orange, they were able to make it look like a seamlessly integrated doll.

According to Baillie, “We mo-capped digital doubles as our canvas and projected beauty-lit footage of the actors’ eyes and mouths for the facial performances. It was like both a mocap show and live-action.” He then shared how lighting and filming a featureless mocap stage like a finished movie took serious planning. They built a real-time version of the set to allow the DP to pre-light all the mocap scenes in prep. During the shoot, virtual production allowed real-time lighting and camera decisions. “We pre-lit the whole thing using a real-time version of Marwen in Unreal,” he noted. “We matched that every day on set with the physical lighting. When we moved a physical light, we would move it in the Unreal view. We had one monitor where you could see what our Alexa 65s that were capturing the footage were seeing, and another one that showed the real-time version of the world. That’s how important getting that lighting was.”

Two hand-crafted versions of Marwen were used on the film — one used for on-location work and one used as a stage. They were scanned and turned into obsessively detailed digital Marwens.

In the end, 17 actors were 3D scanned and digitally sculpted into their doll alter-egos. Those sculpts were then turned into working dolls at Creative Consultants. They were 3D printed, molded, cast and hand-painted. In post, a multi-set re-projection process they invented fused live-action footage with underlying renders of hand keyframed faces. They then plasticized the result to integrate the two. They also rendered all the depth of field in-camera and used digital versions of optical tools like tilt-shift lenses and split diopters to keep the actors in focus.
