Dinosaurs in the multiverse: Why DinoPowers uses real-time animation

From Rick and Morty to Spider-Man: No Way Home and Doctor Strange, multiverses are having a moment right now. And it’s easy to see why. The idea of different worlds, timelines, and creatures colliding is just too good to pass up.

In Dofala Animation’s world, that means arming four young heroes with robot dinosaurs so they can take on villains from a parallel realm. This mix of action and multiverse storytelling made DinoPowers an instant hit when it launched on South Korea’s KBS TV channel, drawing in kids (and likely some adults) with its fast-paced animation style.

But a good story and killer animation aren’t the only things DinoPowers has going for it. It’s also part of an emerging trend that has seen studios around the world embracing real-time animation for its speed and fidelity. In fact, that was one of the main things that drew Dofala to it.

Internally, the team was tired of “strenuous” offline rendering that ate up hours and cut down the number of iterations they could fit in. After discovering Unreal Engine during a VR project, they suspected they could hit their twin goals of excellent visuals and efficient rendering on a single platform. So they gave it a go.

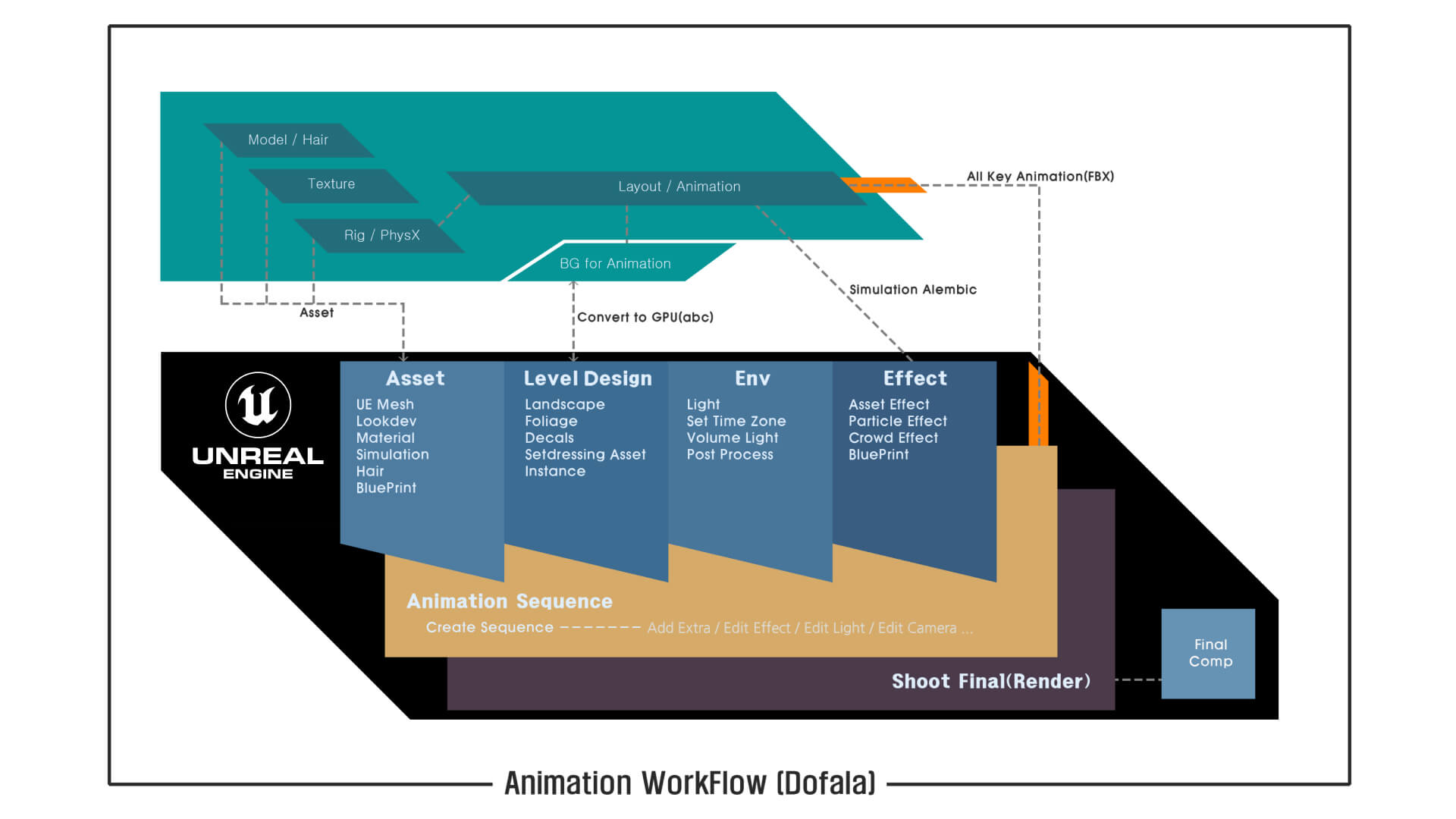

Dofala’s real-time animation workflow

© RONGJUN, DOFALA All rights reserved.

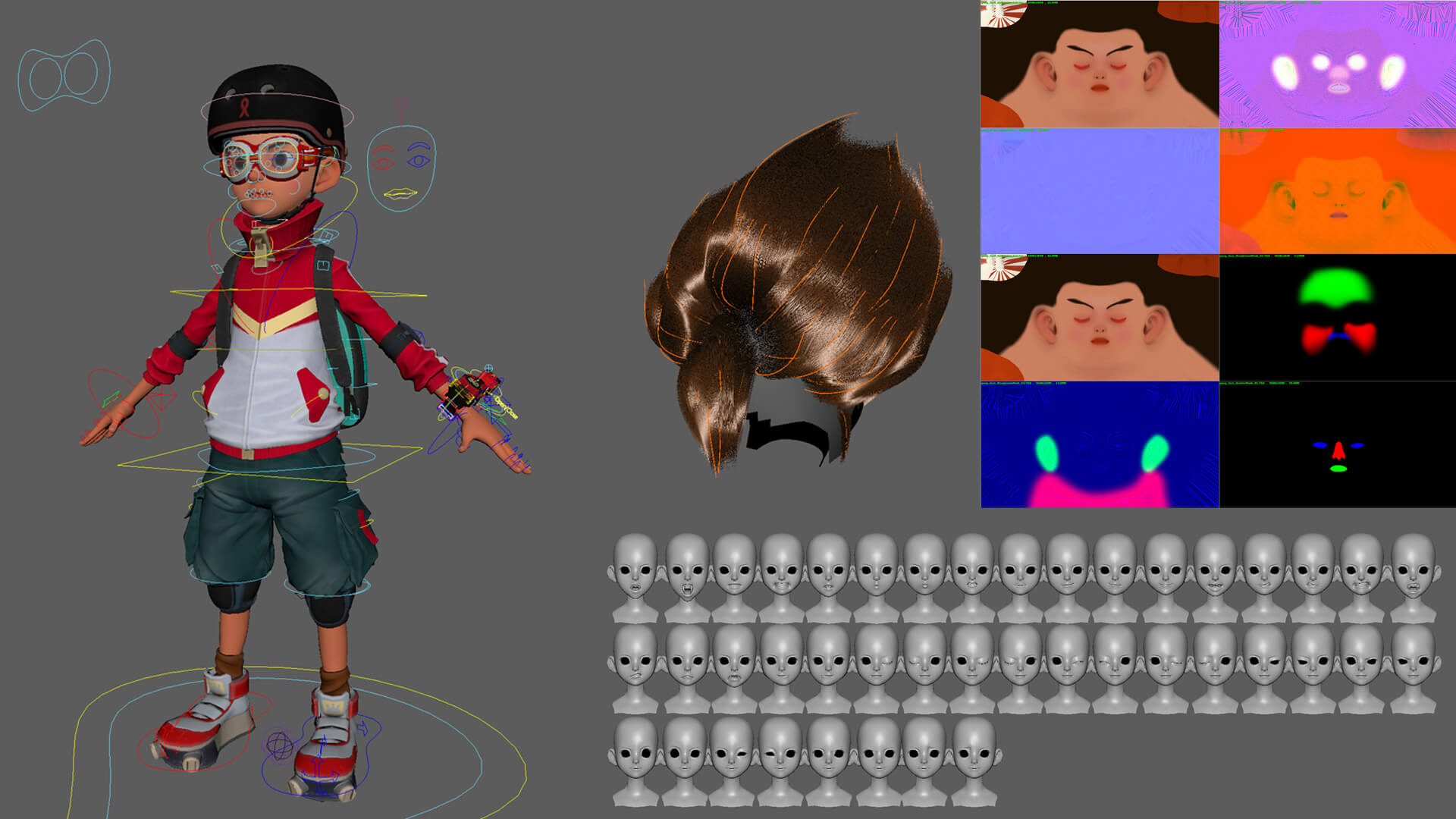

Dofala wanted to do as much as possible in engine, and quickly found that Unreal Engine could bridge their modeling, texturing, rigging, and hair pipelines with the data processing needed to take full advantage of its feature set. For modeling data, Dofala built an FBX-based pipeline using skinning and blend shapes so they didn’t have to rely on Alembic. Although this meant giving up some of their traditional techniques, the benefits of the real-time approach (smaller file sizes, higher frame rates, and greater efficiency) significantly outweighed the drawbacks.

Asset produced to be compatible with Unreal Engine (© RONGJUN, DOFALA. All rights reserved.)
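The size advantage of skinning plus blend shapes over a baked, Alembic-style vertex cache comes down to simple arithmetic. The sketch below is a rough estimate, not a measurement of Dofala's files: it assumes uncompressed 32-bit floats, a hypothetical character with 20,000 vertices, 80 bones, 4 bone influences per vertex, and 30 blend shapes, and a 10-second shot at 24 fps. Real formats add compression and metadata, so read the results as orders of magnitude.

```python
BYTES_PER_FLOAT = 4  # assumption: uncompressed 32-bit floats throughout


def vertex_cache_bytes(vertices, frames):
    """Baked cache: every vertex position (x, y, z) is stored on every frame."""
    return vertices * 3 * BYTES_PER_FLOAT * frames


def skinned_bytes(vertices, bones, influences, blend_shapes, frames):
    """Skinning + blend shapes: large static data stored once, tiny per-frame data."""
    rest = vertices * 3 * BYTES_PER_FLOAT                     # rest pose positions
    weights = vertices * influences * 2 * BYTES_PER_FLOAT     # (bone index, weight) pairs
    shapes = blend_shapes * vertices * 3 * BYTES_PER_FLOAT    # dense deltas (simplification)
    # Per frame: translation (3) + quaternion (4) + scale (3) per bone, one weight per shape.
    per_frame = (bones * 10 + blend_shapes) * BYTES_PER_FLOAT
    return rest + weights + shapes + per_frame * frames


# Hypothetical 10-second shot at 24 fps.
frames = 10 * 24
cache = vertex_cache_bytes(20_000, frames)
rig = skinned_bytes(20_000, bones=80, influences=4, blend_shapes=30, frames=frames)
print(f"vertex cache: {cache / 1e6:.1f} MB")            # 57.6 MB
print(f"skinned + blend shapes: {rig / 1e6:.1f} MB")    # 8.9 MB
```

The cache grows with vertices × frames, while the rig's per-frame cost depends only on bone and shape counts, so the gap widens as meshes get denser and shots get longer.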

For rigging, the team used Control Rig to build rigs that worked in both their 3D digital content creation (DCC) application and Unreal Engine. This let them edit animations in engine and send them back to the DCC (and vice versa), removing the one-way handoff that limits traditional productions. Key data could also stay linked, so no matter how often it was imported and exported or how characters were edited, the original data remained intact.

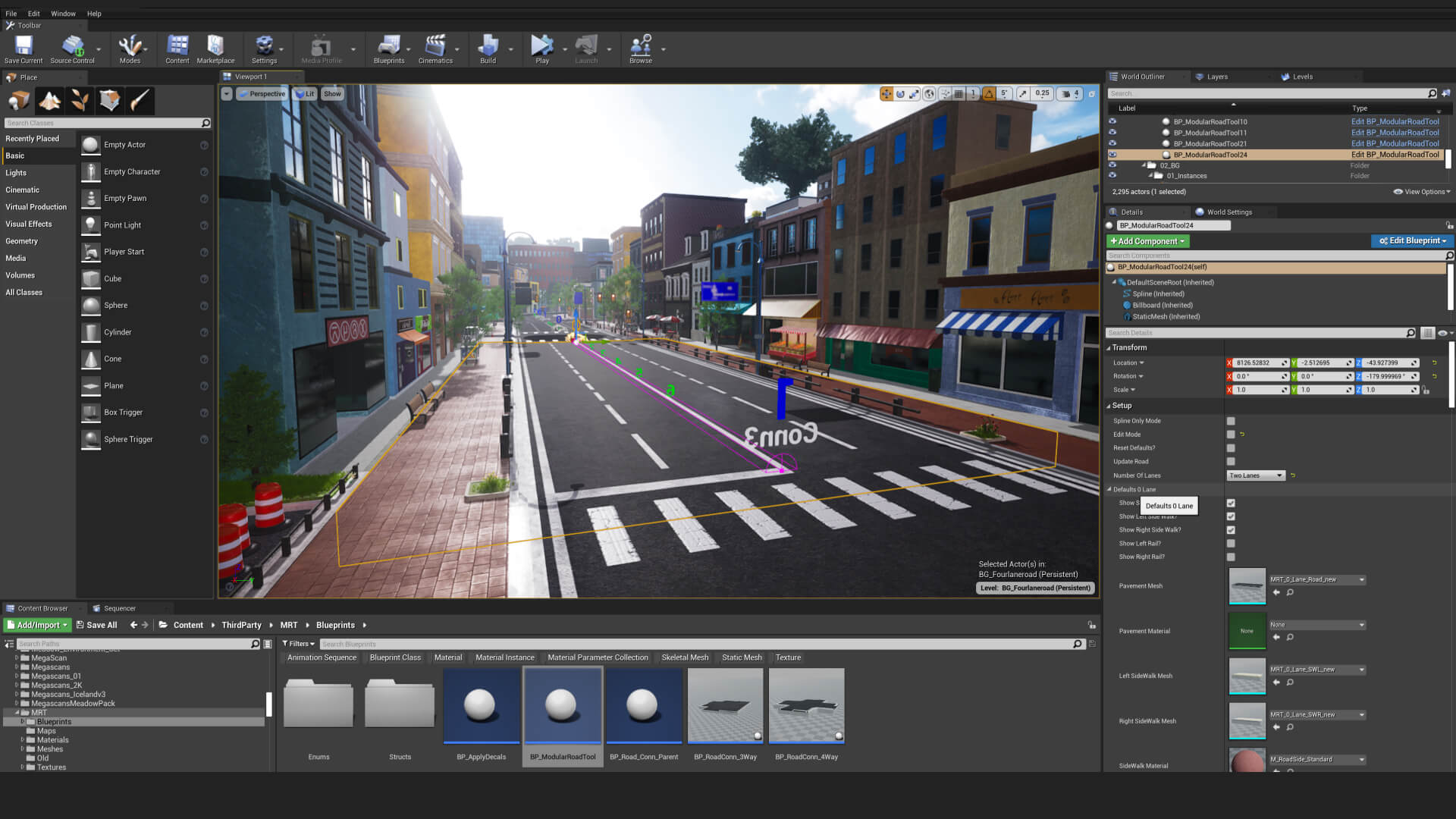

For environments, Dofala builds everything as levels within Unreal Engine. This lets the team produce and edit a far larger volume of environments than they ever could in their DCC, so they can move fast while still delivering the high-quality assets needed to wow viewers.

Environment level produced in Unreal Engine (© RONGJUN, DOFALA. All rights reserved.)

When it came to animation keyframing, the DCC couldn’t natively handle the full environment levels used in Unreal Engine, forcing animators to work with truncated scenes and dummy data. Wanting a smoother experience, Dofala began dividing the real-time environments into sections, exporting them as FBX, and importing them into the DCC. There, the team converted them into GPU cache data, which restored the full scene along with the high frame rates they had quickly grown used to.

Environment level imported to DCC tool using GPU cache data for animation work (© RONGJUN, DOFALA. All rights reserved.)
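One simple way to divide a large environment into pieces that can be exported and cached separately is to bucket its actors into a square grid. The sketch below is an assumption, not Dofala's documented method. It reduces each actor to a hypothetical `(name, x, y)` tuple, and the helper `cells_near` then keeps only the chunks within some radius of a shot's camera, so animators load what the shot needs.

```python
import math
from collections import defaultdict


def chunk_by_grid(actors, cell_size):
    """Group hypothetical (name, x, y) actors into square cells keyed by (col, row)."""
    cells = defaultdict(list)
    for name, x, y in actors:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        cells[key].append(name)
    return dict(cells)


def cells_near(cells, cam_x, cam_y, radius, cell_size):
    """Keys of cells whose square overlaps a circle of `radius` around the camera."""
    keep = []
    for col, row in cells:
        # Clamp the camera position into the cell to find the cell's closest point.
        nx = min(max(cam_x, col * cell_size), (col + 1) * cell_size)
        ny = min(max(cam_y, row * cell_size), (row + 1) * cell_size)
        if math.hypot(cam_x - nx, cam_y - ny) <= radius:
            keep.append((col, row))
    return sorted(keep)


actors = [("rock", 100, 50), ("tree", 1500, 200), ("house", -300, 900)]
cells = chunk_by_grid(actors, cell_size=1000)
print(cells_near(cells, cam_x=0, cam_y=0, radius=500, cell_size=1000))
```

Each surviving cell would then be exported on its own; a real pipeline would also need to handle actors whose bounds straddle cell borders.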

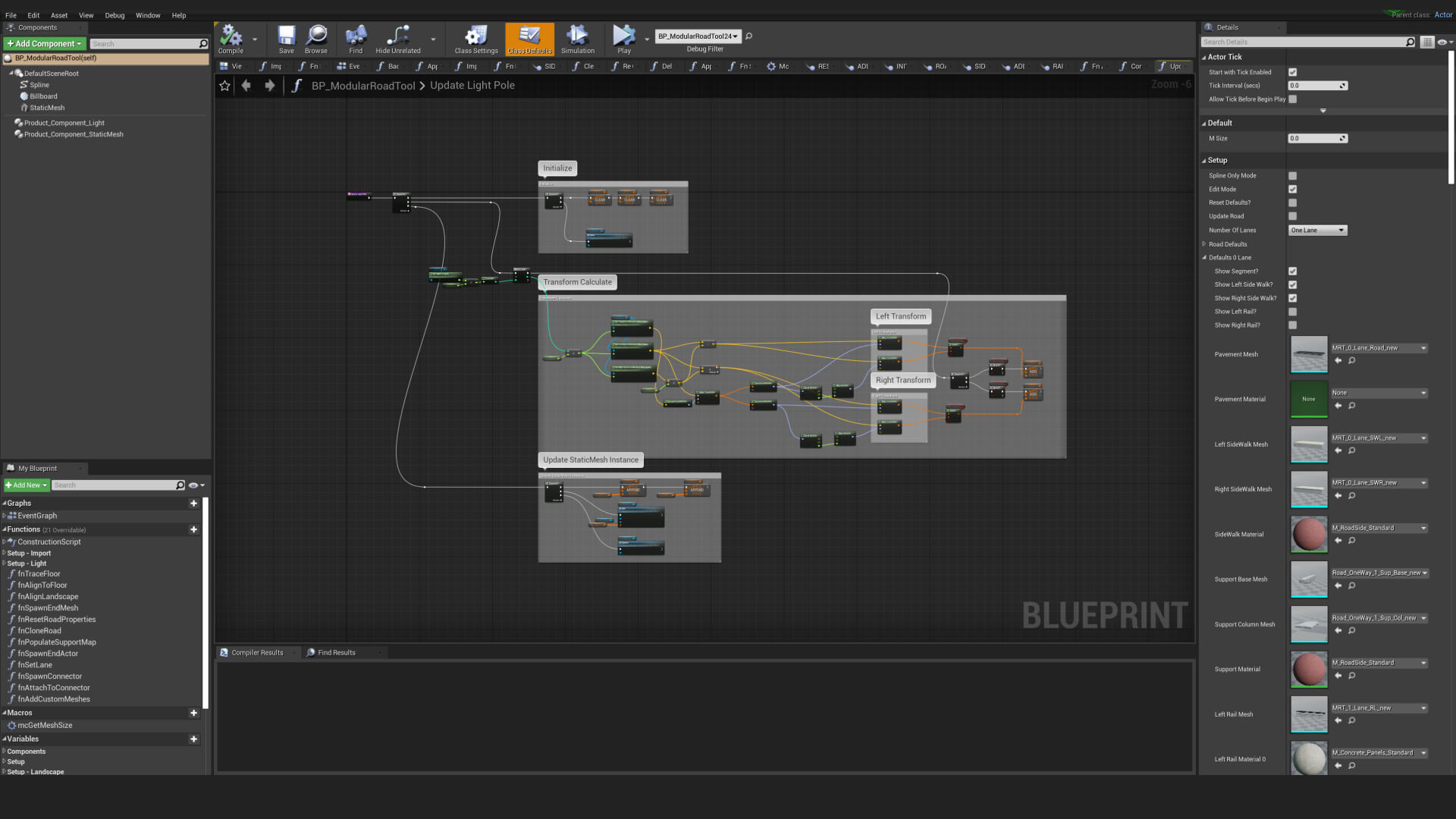

The Blueprints of real-time animation

So what features does Dofala lean on when it comes to real-time animation?

To start, there’s the Blueprint visual scripting system, which helps animation teams automate existing processes or build custom ones that save time and money. For instance, when Dofala needed to adjust several Niagara settings at once, they built Blueprints to do it. When animating assets, the team used Blueprints to add animation procedurally, so assets could be reused without being keyframed in the DCC. Dofala also used Blueprints for various level-building tasks, physics simulations, and crowd shots.

And the best part? The artists didn’t have to become coding experts to do it. They created all their scripts and tools without any programming support, helping them build the efficient pipeline they saw in their heads, in the most intuitive way possible.
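Blueprints are node graphs rather than text, so the following is only a Python analogy for what "adding animation procedurally" means. It is not Dofala's graph. The hypothetical `idle_hover` function computes a bobbing offset and a slow sway from the current time plus a per-instance phase, so every placed copy of an asset moves without keyframes and never in lockstep with its neighbors. The amplitude, period, and sway values are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Transform:
    z: float    # vertical offset (hypothetical units: cm)
    yaw: float  # rotation about the vertical axis, in degrees


def idle_hover(time_s, phase, amplitude=10.0, period_s=2.0, sway_deg=5.0):
    """Hypothetical procedural idle: a sine bob plus a yaw sway at half the frequency."""
    angle = 2.0 * math.pi * (time_s / period_s) + phase
    return Transform(z=amplitude * math.sin(angle),
                     yaw=sway_deg * math.sin(0.5 * angle))


def phase_for_instance(index, count):
    """Spread phases evenly so copies of the same asset are out of sync."""
    return 2.0 * math.pi * index / count


# Three copies of one asset, sampled at the same moment, each in a different pose.
for i in range(3):
    print(i, idle_hover(0.5, phase_for_instance(i, 3)))
```

Because the motion is a function of time and a few parameters, the same definition can be dropped onto any number of assets, which is the reuse benefit the paragraph above describes.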


Modular road produced with Blueprints (© RONGJUN, DOFALA. All rights reserved.)

Another essential feature was Sequencer, Unreal Engine’s multi-track editor. Sitting at the core of Dofala’s real-time animation pipeline, Sequencer made it easy to adjust the parameters of the team’s plugins and actors as they set up shots.


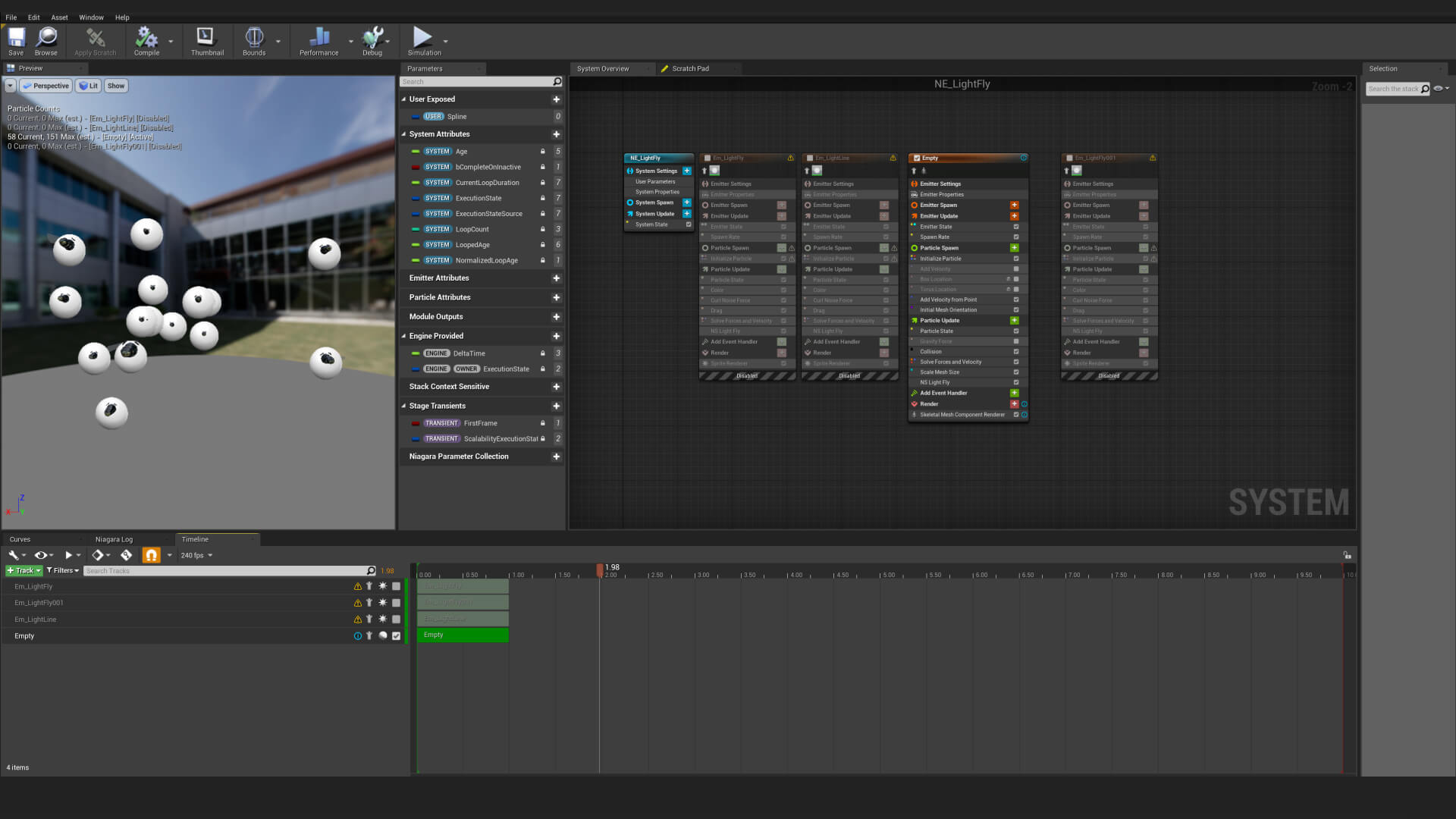

Controlling the firefly effect’s particle movements using Niagara (© RONGJUN, DOFALA. All rights reserved.)

Using Niagara, Unreal Engine’s programmable VFX system, the team also created interactions that simple effect assets cannot, as well as events that trigger timed effects linked to simulated forces like wind and gravity. Niagara was especially useful for a water plugin that produced flotation, air bubbles, and ripples: the effects not only knew where the water’s surface was, but also made each particle react correctly to timed events.
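The core of a surface-aware water effect is a per-particle update that compares each particle's height with the water surface. The sketch below is plain Python, not Niagara module code, and everything in it is an assumption for illustration: a flat surface at a fixed height, hypothetical buoyancy and drag constants, and a rule that a particle (say, an air bubble) crossing the surface from below records a ripple event that a timed effect could respond to.

```python
from dataclasses import dataclass, field

GRAVITY = -980.0  # cm/s^2; Unreal works in centimeters by default


@dataclass
class Particle:
    z: float   # height in cm
    vz: float  # vertical velocity in cm/s


@dataclass
class WaterSim:
    surface_z: float = 0.0
    buoyancy: float = 1500.0  # hypothetical upward acceleration when submerged (cm/s^2)
    drag: float = 2.0         # hypothetical water damping, per second
    ripples: list = field(default_factory=list)  # (time, particle index) crossing events

    def step(self, particles, dt, time_s):
        for i, p in enumerate(particles):
            was_below = p.z < self.surface_z
            accel = GRAVITY
            if was_below:
                # Submerged: buoyancy pushes up and drag opposes the current velocity.
                accel += self.buoyancy - self.drag * p.vz
            # Semi-implicit Euler: update velocity first, then position.
            p.vz += accel * dt
            p.z += p.vz * dt
            if was_below and p.z >= self.surface_z:
                # Breaking the surface from below is the event that spawns a ripple.
                self.ripples.append((time_s, i))


sim = WaterSim()
bubbles = [Particle(z=-10.0, vz=0.0)]
dt = 1.0 / 60.0
for frame in range(120):
    sim.step(bubbles, dt, frame * dt)
print(sim.ripples[:3])
```

Keeping the surface test inside the update is what lets each particle "know" where the water is; in an engine the fixed `surface_z` would be replaced by a query against the actual water mesh or height field.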

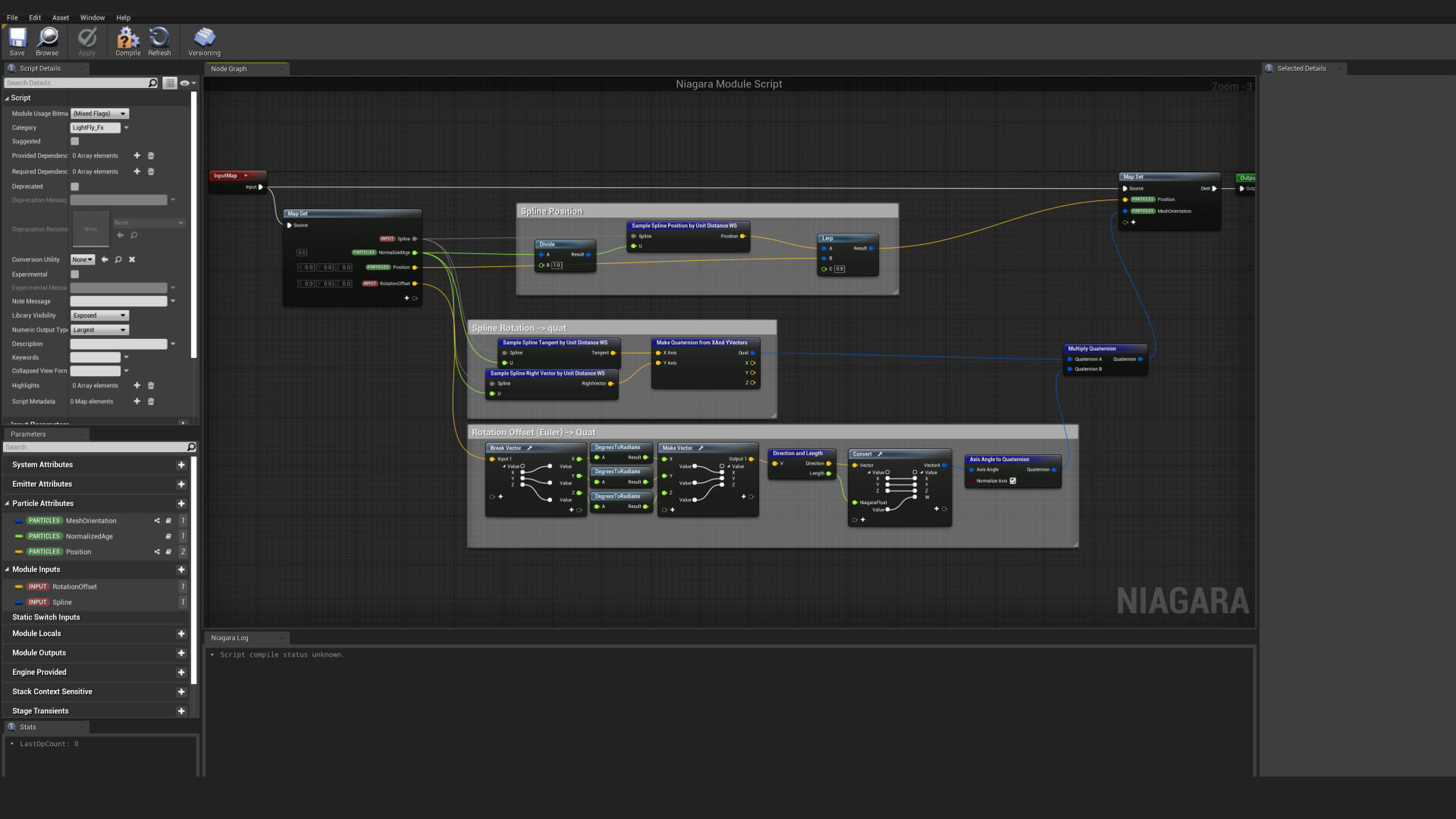

Niagara was used again for electrical effects, ranging from arcs of electricity shooting along a wire to chaotic beams radiating from a box. The wire effect relied on spline tracking: Dofala used a Spline Component as an input to get the normal and direction vectors along the wire, then used those vectors as the reference transform for the particles’ noise.
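Using a spline's direction and normal as the frame for noise keeps the jitter perpendicular to the wire, so the arc crackles around its path instead of drifting off it. The sketch below illustrates the idea under stated assumptions: a cubic Bézier curve stands in for Unreal's Spline Component, the normal is built by crossing a fixed up vector with the tangent, and `random.Random.uniform` stands in for a proper noise function. It is plain Python, not Niagara's API.

```python
import math
import random


def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def cross(a, b): return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(a):
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return scale(a, 1.0 / length)


def bezier(p0, p1, p2, p3, t):
    """Position and unit tangent (direction) of a cubic Bezier at t in [0, 1]."""
    u = 1.0 - t
    pos = add(add(scale(p0, u ** 3), scale(p1, 3 * u * u * t)),
              add(scale(p2, 3 * u * t * t), scale(p3, t ** 3)))
    deriv = add(add(scale(p0, -3 * u * u), scale(p1, 3 * u * u - 6 * u * t)),
                add(scale(p2, 6 * u * t - 3 * t * t), scale(p3, 3 * t * t)))
    return pos, normalize(deriv)


def frame_at(points, t, up=(0.0, 0.0, 1.0)):
    """Position plus an orthonormal (direction, normal, binormal) frame on the curve."""
    pos, direction = bezier(*points, t)
    # Assumes the wire is never parallel to `up`, otherwise the cross product vanishes.
    normal = normalize(cross(up, direction))
    binormal = cross(direction, normal)
    return pos, direction, normal, binormal


def arc_particles(points, count, jitter, rng):
    """Place `count` (>= 2) particles along the wire with noise only across the wire."""
    out = []
    for i in range(count):
        pos, _, normal, binormal = frame_at(points, i / (count - 1))
        offset = add(scale(normal, rng.uniform(-jitter, jitter)),
                     scale(binormal, rng.uniform(-jitter, jitter)))
        out.append(add(pos, offset))
    return out


wire = ((0, 0, 0), (1, 0, 2), (2, 0, 2), (3, 0, 0))
for p in arc_particles(wire, count=5, jitter=0.2, rng=random.Random(7)):
    print(p)
```

Re-sampling the noise every frame would make the arc flicker along the same path, which is the behavior the wire effect needs.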

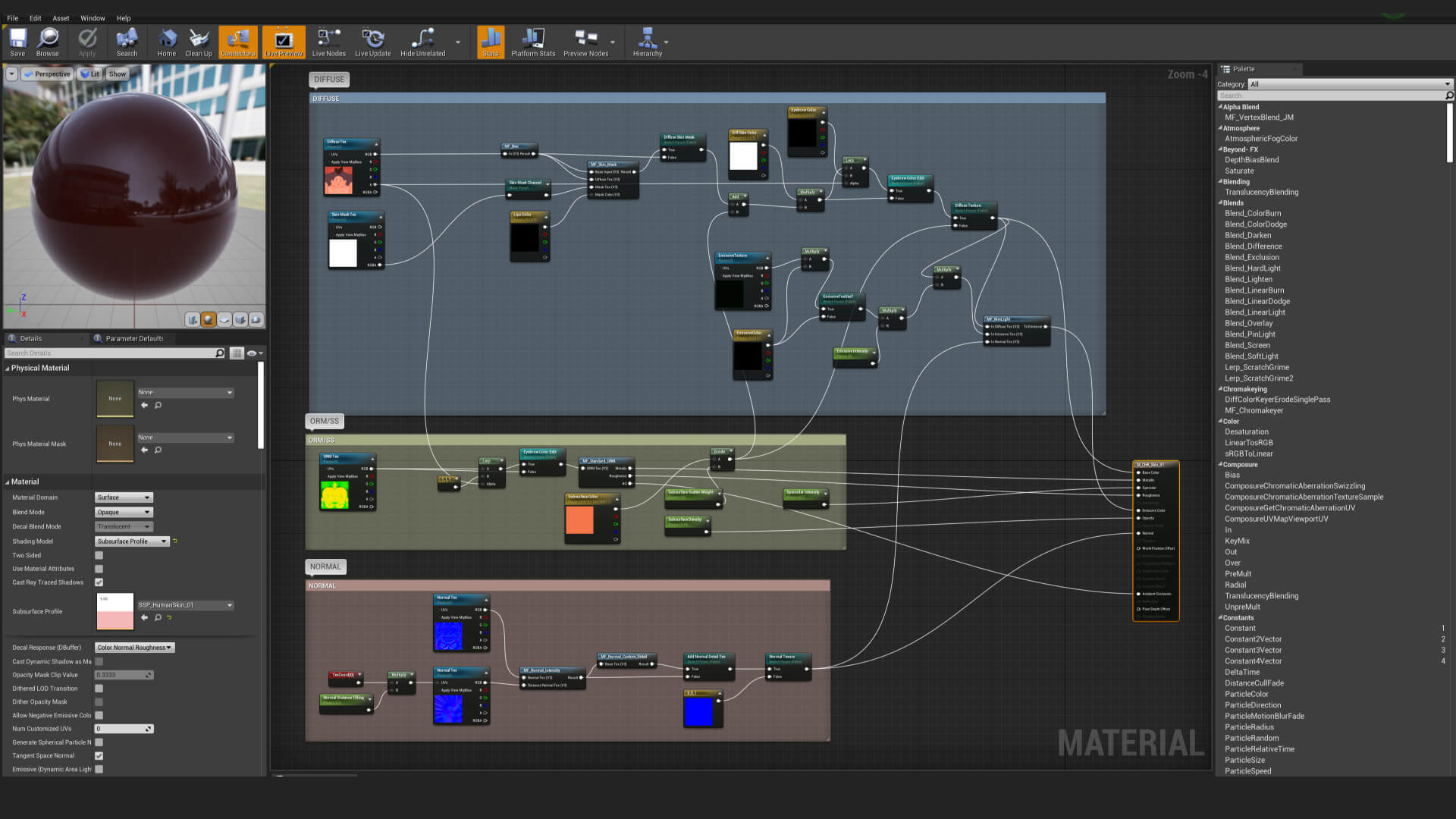

The team created most of its shaders, including the dissolve effects, as node networks in the Material Editor and applied them without writing any HLSL. Beyond that, a Sobel filter produced colorful outlines that appeared when lights were close; fisheye lens effects came from applying a UV distortion to scene color; and a Poseable Mesh was used for an eye-catching afterimage effect of a robot speed-streaking across a field.

Skin Material implemented with Material Editor (© RONGJUN, DOFALA. All rights reserved.)
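Two of the Material Editor effects mentioned above, the Sobel outline and the fisheye distortion, reduce to small per-pixel formulas. The sketch below runs them on a grayscale image stored as nested lists; in a material they would run per pixel on the GPU while sampling a scene texture. The outline threshold, the distortion strength `k`, and the barrel formula r' = r(1 + k r²) are illustrative assumptions, not Dofala's actual material graphs.

```python
import math

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_edges(img, threshold):
    """Return a mask (1 = outline) where the Sobel gradient magnitude exceeds `threshold`."""
    h, w = len(img), len(img[0])
    mask = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):          # border pixels are skipped for simplicity
        for x in range(1, w - 1):
            gx = gy = 0.0
            for j in range(3):
                for i in range(3):
                    v = img[y + j - 1][x + i - 1]
                    gx += SOBEL_X[j][i] * v
                    gy += SOBEL_Y[j][i] * v
            mask[y][x] = 1 if math.hypot(gx, gy) > threshold else 0
    return mask


def fisheye_uv(u, v, k=0.3):
    """Push a UV in [0, 1]^2 outward from the center: r' = r * (1 + k * r^2).

    Sampling scene color at the returned UV gives a barrel (fisheye-like) look.
    Results can fall outside [0, 1], so a real material would clamp or mask them.
    """
    dx, dy = u - 0.5, v - 0.5
    factor = 1.0 + k * (dx * dx + dy * dy)
    return 0.5 + dx * factor, 0.5 + dy * factor


# A dark-to-bright step: the outline lands on the two columns around the boundary.
step_image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
for row in sobel_edges(step_image, threshold=1.0):
    print(row)
print(fisheye_uv(1.0, 0.5))
```

The colored outlines described above would then tint the masked pixels, with the tint driven by how close a light is; that coupling is left out here.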

The value of real-time productions

In less than a year, the real-time workflow has changed everything for the team, providing much-needed relief from the bottlenecks of traditional animation. What used to take hours or days now happens in the moment, helping Dofala meet the accelerated deadlines of a fixed TV schedule without compromising asset quality or the post-processing changes requested in reviews.

Because Dofala can always see near-final pixels on screen, the team can review work together and apply feedback in real time. When edits are needed, they can add modeling, make immediate camera changes, or freely modify the shape and color of assets while keeping the existing work intact.

The new pipeline also opened an important door for an ambitious studio like Dofala: transmedia content. Assets produced in this real-time workflow can be reused across mediums, including VR and AR games. Dofala is already building a companion game, DinoPowers AR Combat, with these assets, pushing the company into a whole new area. We can’t wait to see what happens next!
