Achieving Top Inference Performance with the NVIDIA H100 Tensor Core GPU and NVIDIA TensorRT-LLM

Best-in-class AI performance requires an efficient parallel computing architecture, a productive tool stack, and deeply optimized algorithms. NVIDIA released the open-source NVIDIA TensorRT-LLM, which includes the latest kernel optimizations for the NVIDIA Hopper architecture at the heart of the NVIDIA H100 Tensor Core GPU. These optimizations enable models like Llama 2 70B to execute using accelerated FP8 operations on H100 GPUs while maintaining inference accuracy.

At a recent launch event, AMD talked about the inference performance of the H100 GPU compared to that of its MI300X chip. The results shared did not use optimized software, and the H100, if benchmarked properly, is 2x faster.

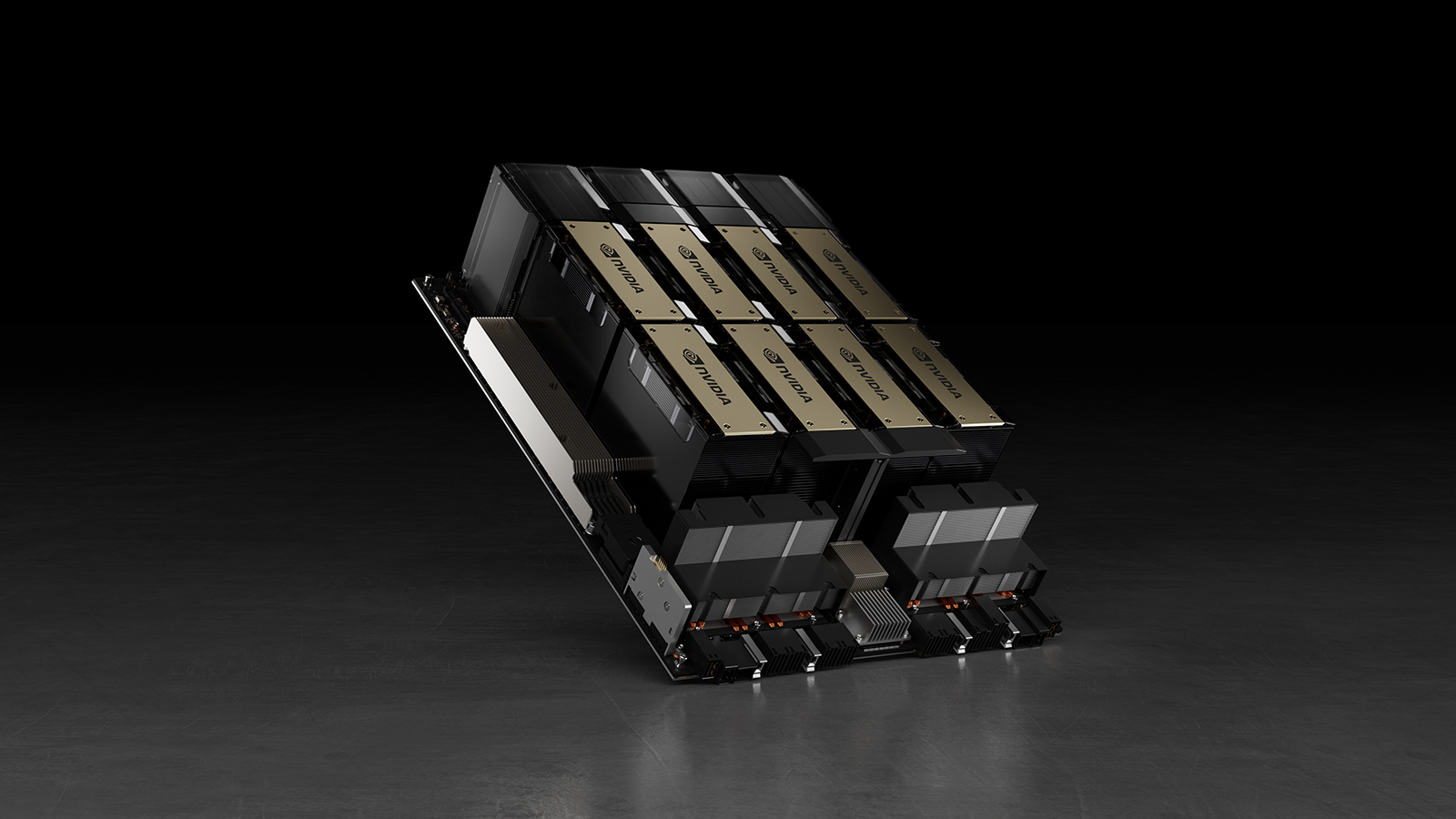

The following is the actual measured performance of a single NVIDIA DGX H100 server with eight NVIDIA H100 GPUs on the Llama 2 70B model. This includes results for “Batch-1,” where inference requests are processed one at a time, as well as results using fixed response-time processing.

Figure 1. Llama 2 70B server inference performance in queries per second with 2,048 input tokens and 128 output tokens for “Batch 1” and various fixed response time settings

AMD’s implied claims for H100 are based on the configuration taken from AMD launch presentation footnote #MI300-38: vLLM v.02.2.2 inference software on an NVIDIA DGX H100 system, running a Llama 2 70B query with an input sequence length of 2,048 and an output sequence length of 128. AMD claimed performance for its 8x GPU MI300X system relative to DGX H100.

NVIDIA measured data uses a DGX H100 with 8x NVIDIA H100 Tensor Core GPUs with 80 GB HBM3 and publicly available NVIDIA TensorRT-LLM: v0.5.0 for batch 1 and v0.6.1 for latency threshold measurements. Workload details are the same as in footnote #MI300-38.

DGX H100 can process a single inference in 1.7 seconds using a batch size of one—in other words, one inference request at a time. A batch size of one results in the fastest possible response time for serving a model. To optimize both response time and data center throughput, cloud services set a fixed response time for a particular service. This enables them to combine multiple inference requests into larger “batches” and increase the overall inferences per second of the server. Industry-standard benchmarks like MLPerf also measure performance with this fixed response time metric.
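The idea of batching under a fixed response time can be sketched in a few lines. This is a minimal illustration, not how TensorRT-LLM or any cloud service actually schedules requests: the function `largest_batch_within_budget` and the latency model `toy_latency_ms` are hypothetical names introduced here, and the 60 ms cost per additional request is an invented number chosen only to make the example concrete. Only the 1.7-second batch-1 latency comes from the text above.

```python
from typing import Callable


def largest_batch_within_budget(
    latency_ms: Callable[[int], int], budget_ms: int, max_batch: int
) -> int:
    """Return the largest batch size whose latency fits the budget (0 if none)."""
    best = 0
    for batch in range(1, max_batch + 1):
        if latency_ms(batch) <= budget_ms:
            best = batch
    return best


def toy_latency_ms(batch: int) -> int:
    # HYPOTHETICAL latency model, not measured data:
    # 1,700 ms at batch 1 (the figure quoted in the article) plus an
    # invented 60 ms for every additional request in the batch.
    return 1700 + 60 * (batch - 1)


if __name__ == "__main__":
    for budget in (1700, 2500):
        batch = largest_batch_within_budget(toy_latency_ms, budget, max_batch=64)
        qps = batch / (toy_latency_ms(batch) / 1000)
        print(f"budget {budget} ms -> batch {batch}, about {qps:.2f} inferences/s")
```

Under this toy model, loosening the budget lets the server pick a larger batch, so throughput rises even though each individual request waits longer. A real latency curve would have to be measured on the target hardware and software.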

Small tradeoffs in response time can multiply the number of inference requests that a server can process in real time. Using a fixed 2.5-second response time budget, an 8-GPU DGX H100 server can process over five Llama 2 70B inferences per second, compared to fewer than one per second at batch one.
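The arithmetic behind these figures can be checked with a short sketch. It uses only numbers quoted in this article (1.7 seconds at batch one, a 2.5-second budget, and five inferences per second as a lower bound for "over five"). Two assumptions are made here and are not stated in the source: batch-one throughput is taken as the reciprocal of the single-request latency, and the number of requests in flight is estimated with Little's law, treating every request as taking the full budget.

```python
# Figures quoted in the article.
BATCH1_LATENCY_S = 1.7        # one request at a time on DGX H100
BUDGET_S = 2.5                # fixed response-time budget
BUDGET_THROUGHPUT_QPS = 5.0   # "over five" inferences/s, used as a lower bound


def batch1_throughput(latency_s: float) -> float:
    """Requests per second when requests are served strictly one at a time."""
    return 1.0 / latency_s


def requests_in_flight(throughput_qps: float, response_time_s: float) -> float:
    """Little's law: average concurrency = throughput x time in system."""
    return throughput_qps * response_time_s


qps_b1 = batch1_throughput(BATCH1_LATENCY_S)
throughput_gain = BUDGET_THROUGHPUT_QPS / qps_b1
latency_ratio = BUDGET_S / BATCH1_LATENCY_S
in_flight = requests_in_flight(BUDGET_THROUGHPUT_QPS, BUDGET_S)

if __name__ == "__main__":
    print(f"batch-1 throughput:      {qps_b1:.2f} inferences/s")
    print(f"throughput gain:         at least {throughput_gain:.1f}x")
    print(f"response-time increase:  {latency_ratio:.2f}x")
    print(f"requests in flight:      up to about {in_flight:.1f}")
```

Under these assumptions, accepting roughly 1.5x the response time buys at least 8.5x the throughput, which is the tradeoff the paragraph above describes.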

AI is moving fast and the NVIDIA CUDA ecosystem enables us to optimize our stack quickly and continuously. We look forward to continuing to improve AI performance with every update of our software, so be sure to check out our performance pages and GitHub sites for the latest.

Source: Dave Salvator and Ashraf Eassa/NVIDIA
