Responsible AI and enhanced model training at Unity

Unity Muse helps you explore, ideate, and iterate with powerful AI capabilities. Two of these capabilities are Texture and Sprite, which transform natural language and visual inputs into usable assets.

Bringing AI into the Unity Editor with Muse helps you realize your vision more easily by quickly transforming ideas into something tangible. You can also adjust and iterate using text prompts, patterns, colors, and sketches, and turn them into real, project-ready outputs.

In order to provide useful outputs that are safe, responsible, and respectful of other creators’ copyright, we challenged ourselves to innovate in our training techniques for the AI models that power Muse’s sprite and texture generation.

In this blog post, we share how Muse generates results, unpack our model training methodologies, and introduce our two new foundation models.

Training AI models

As we debut Muse’s Texture and Sprite capabilities, we are also pioneering two bespoke diffusion models, each trained from scratch on proprietary data that is Unity owned or licensed.

Expanding our library of owned content

One key technique we employ to enhance the scale and variety of our datasets is data augmentation, which allows us to produce many variations from original Unity-owned data samples. This significantly enriches our training sets and enhances the models’ ability to generalize from limited samples. The techniques we use to synthetically expand our dataset include geometric transformations, color space adjustments, noise injection, and sample variations produced with generative models such as Stable Diffusion.
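To make the perturbation-based techniques more concrete, here is a minimal sketch in plain Python. It is not Unity's pipeline: the image representation (rows of RGB tuples in the range 0 to 1), the function names, the gain range, and the noise level are all assumptions chosen for illustration. The generation-based variations are not covered here.

```python
import random

# Assumption: an image is a list of rows, each row a list of (r, g, b)
# floats in [0, 1]. Real pipelines would use an image or tensor library.

def clamp(value):
    """Keep a channel value inside the valid [0, 1] range."""
    return max(0.0, min(1.0, value))

def flip_horizontal(image):
    """Geometric transformation: mirror each row left to right."""
    return [list(reversed(row)) for row in image]

def rotate_90(image):
    """Geometric transformation: rotate the image 90 degrees clockwise."""
    return [list(column) for column in zip(*image[::-1])]

def scale_channels(image, gains):
    """Color space adjustment: multiply each channel by its own gain."""
    return [[tuple(clamp(c * g) for c, g in zip(pixel, gains)) for pixel in row]
            for row in image]

def add_noise(image, sigma, rng):
    """Noise injection: add zero-mean Gaussian noise to every channel."""
    return [[tuple(clamp(c + rng.gauss(0.0, sigma)) for c in pixel) for pixel in row]
            for row in image]

def augment(image, rng):
    """Return four variations of one original sample."""
    gains = [rng.uniform(0.8, 1.2) for _ in range(3)]  # hypothetical gain range
    return [
        flip_horizontal(image),
        rotate_90(image),
        scale_channels(image, gains),
        add_noise(image, 0.02, rng),  # hypothetical noise level
    ]

rng = random.Random(0)  # fixed seed so the variations are reproducible
sample = [[(rng.random(), rng.random(), rng.random()) for _ in range(4)]
          for _ in range(3)]
variations = augment(sample, rng)
```

Each original sample yields several variations, and chaining the functions (for example, a flip followed by a color adjustment) multiplies the number of distinct training images further.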

Recently, Stable Diffusion has been the subject of ethical concerns because the model was originally trained on data scraped from the internet. We limited our reliance on pretrained models as we built Muse’s Texture and Sprite capabilities by training a latent diffusion model architecture from scratch, on original datasets that Unity owns and has responsibly curated. By using the Stable Diffusion model only minimally, as part of our data augmentation techniques, we were able to safely leverage it to expand our original library of Unity-owned assets into a robust and diverse repository of outputs that are unique, original, and do not contain any copyrighted artistic styles. We also applied additional mitigations on top of this, which we describe below. Our training datasets for the latent diffusion models underpinning Muse’s Texture and Sprite capabilities do not include any data scraped from the internet.

Below are some examples of content expanded through the augmentation techniques described above.

A sample of original data (top left) and resulting synthetic variations obtained through a mix of augmentation techniques, both perturbation-based (color space adjustments, top to bottom) and generation-based (left to right).

Further samples of original data (left columns) and their resulting synthetic variations.

After augmenting our existing data, there were still gaps in a range of subjects that we needed to fill. To do this, we trained Stable Diffusion on our own content until its behavior was significantly changed. Using these derivative models, we created entirely new synthetic data using a prefiltered list of subjects. The list of subjects went through both human review and additional automated filtering using a large language model (LLM) to ensure we did not attempt to create any synthetic images that would violate our guiding principles and go against what we were trying to achieve: a dataset completely devoid of recognizable artistic styles, copyrighted materials, and potentially harmful content.

The result was two large datasets of both augmented and fully synthetic images, which we had high confidence would not contain unwanted concepts. However, as confident as we were, we still wanted to add even more filtering to ensure our models’ safety.

Additional data filtering for safe and useful outputs

Since our main priorities were safety, privacy, and ensuring our tools help you without negative impacts, we developed four separate classifier models that were responsible for additional dataset filtering. These models helped ensure that all content contained in the dataset met the standards we set with our guiding AI principles, as well as additional checks for image quality.

Together, the reviewer models were responsible for determining that synthetic images:

- Did not contain the features of any recognizable human

- Did not contain any non-generic artistic styles

- Did not contain any IP characters or logos

- Were of an acceptable level of quality

If an image did not pass the high confidence threshold required by any of the four reviewer models, it was discarded from our dataset. We decided to err on the side of caution and weighted our models towards rejection so that only the images with the highest confidence would pass the filters and make it into the final dataset.
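The rejection rule described above can be sketched as a simple all-must-pass check. This is an illustration only: the `ReviewScores` fields, the `PASS_THRESHOLD` value of 0.95, and the example file names are hypothetical, and each score is assumed to be a reviewer model's confidence that the image is acceptable on its criterion.

```python
from dataclasses import dataclass, astuple

@dataclass(frozen=True)
class ReviewScores:
    """Hypothetical per-image confidences, one per reviewer model (0 to 1)."""
    no_recognizable_human: float
    generic_style_only: float
    no_ip_characters_or_logos: float
    acceptable_quality: float

# Assumed value; a high threshold biases the filter toward rejection.
PASS_THRESHOLD = 0.95

def passes_all_reviewers(scores, threshold=PASS_THRESHOLD):
    """An image is kept only if every reviewer clears the threshold."""
    return all(score >= threshold for score in astuple(scores))

def filter_dataset(candidates, threshold=PASS_THRESHOLD):
    """Return the names of the images that pass all four reviewers."""
    return [name for name, scores in candidates
            if passes_all_reviewers(scores, threshold)]

candidates = [
    ("rock.png", ReviewScores(0.99, 0.98, 0.99, 0.97)),
    ("portrait.png", ReviewScores(0.40, 0.99, 0.99, 0.99)),     # fails human check
    ("blurry_tree.png", ReviewScores(0.99, 0.99, 0.99, 0.60)),  # fails quality check
]
kept = filter_dataset(candidates)
```

Because a single low score is enough to discard an image, raising the threshold trades dataset size for confidence, which matches the cautious stance described above.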

Introducing our models: Photo-Real-Unity-Texture-1 and Photo-Real-Unity-Sprite-1

At Unite, we announced early access for Muse’s Texture and Sprite capabilities. The first iterations of the models that power these tools are internally referred to as Photo-Real-Unity-Texture-1 and Photo-Real-Unity-Sprite-1. These models are designed to only have a basic understanding of stylization and are primarily focused on photorealism.

Additionally, if you want to guide the models to match an existing style in your project, you can teach them to create content in a specific art style by providing our style training system with a handful of your own reference assets. This creates a small secondary model that works in tandem with the main model to guide its outputs. This small secondary model is private to you or your organization as its trainers, and we will never use this content to train our main models.

Because our models focus on photorealism, we did not have to train our main models on countless different styles. This architecture makes it easier to train the main models while maintaining our commitment to responsible AI and, at the same time, giving you a deep level of artistic control.

These models today are just the beginning. We expect Muse to continue getting smarter and to provide better outputs, and we’ll be shepherding the models on this path with our model-improvement roadmaps.

Photo-Real-Unity-Texture-1 roadmap

Sample outputs from our first version of Photo-Real-Unity-Texture-1. From left to right: metal slime, blue crystal glass rocks, red fabric, bear fur

At the moment, our texture model is quite capable all around. It knows a significant number of concepts, and you can freely mix completely unrelated concepts and achieve beautiful results, such as “metal slime” or “blue crystal glass rocks,” as shown above.

While the model is quite capable in its current state, after learning how it responds to different prompts and input methods, we’ve observed that it may be difficult to achieve advanced material concepts with single-word prompts. There are additional methods to help guide the model to reach your vision, but we want to continue giving you more control, both in terms of basic prompts’ accuracy and by adding new methods of guiding the model.

In the future, we plan to add a color picker, additional premade guidance patterns, an improved system for creating your own guidance patterns, and other new methods of visual input, which we’re currently experimenting with.

Going forward, our primary focus for Photo-Real-Unity-Texture-1 is to identify any weak material concepts and continue to improve the overall quality and capability through frequent retraining of the model. Your feedback through the in-tool rating system is critical to helping us build the best tool we can by helping us identify weak points in the model’s capabilities. Combined with our frequent training schedule, we are rapidly improving the model, making it easier to use and more knowledgeable of the material world.

Photo-Real-Unity-Sprite-1 roadmap

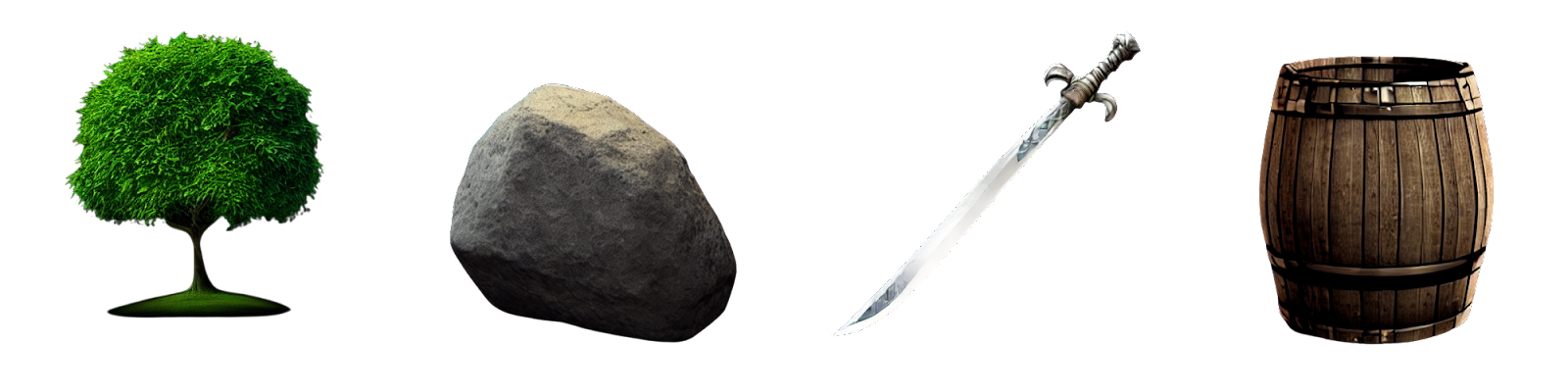

Sample outputs from our first version of Photo-Real-Unity-Sprite-1. From left to right: a green tree, a boulder, a sword, a barrel

Similar to Photo-Real-Unity-Texture-1, our foundational sprite model is overall very capable and knows many concepts. As the tool does not yet have built-in animation capabilities, we chose to focus our initial efforts on maximizing the quality of the most commonly used static sprite concepts. You can see the raw outputs of the base model in the image above. In normal use, these would be guided by a user-trained model to match a specific art style.

While static objects are quite reliable already, we’re still working on improving the anatomical accuracy of animals and humans. It’s possible to get good results on these types of subjects; however, you may encounter cases of extra or missing limbs or distorted faces. This is a side effect of our commitment to responsible AI and having strict limitations on what data can be used. We’re taking privacy and safety seriously, even at the expense of quality for some subjects in our initial early access release.

You may also experience cases where a generated sprite is completely blank. This is caused by our visual content moderation filter. We have chosen to be overly cautious during our initial launch when it comes to output filtering on Photo-Real-Unity-Sprite-1, and, as a result, some art styles may trigger false positives on the filter. We intend to ease the restrictions over time as we continue to receive your feedback and improve our content filter.

We expect the quality of all subjects across the board to rapidly increase as we get feedback and continue to source more data responsibly. We intend to put Photo-Real-Unity-Sprite-1 through a training schedule as rigorous as the one for Photo-Real-Unity-Texture-1.

Unity’s responsible path to AI-enhanced development

Unity Muse is our first step toward bringing greater creative control to our community with the power of generative AI in the most responsible and respectful way possible. We’ve built this product with a user-first focus, and we aim to continue to change and improve based on your feedback.

We recognize the potential impact of generative AI on the creative industry, and we take it very seriously. We have taken our time in developing these tools to ensure that we are not replacing creators but instead enhancing your abilities. We believe the world is a better place with more creators in it, and with Unity Muse and the models that power it, we continue to support this mission.

By: Sylvio Drouin / Unity
