Real-time, Ray Tracing, and Level Ex

Headquartered in Chicago, the Level Ex team is made up of video game developers who previously worked for game giants like Activision, EA, Microsoft, LucasArts, and more. Now, these experts apply their full toolbox of skills in game design, engineering, and graphics toward making games for medical professionals that help improve physician skills. Level Ex accelerates the adoption curve in healthcare in a way that has never been done before.

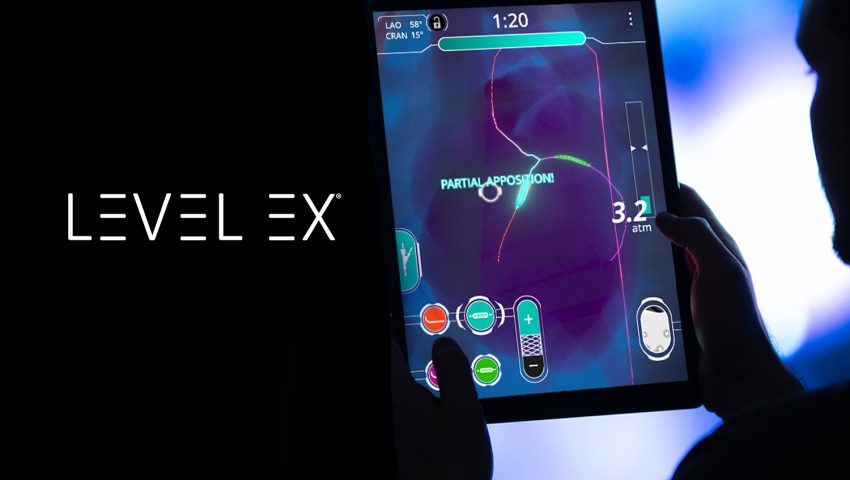

During SIGGRAPH 2019, two members of the Level Ex team demoed “Marching All Kinds of Rays…On Mobile” as part of Real-Time Live! We sat down with CEO and Founder Sam Glassenberg to learn more about the demo and what the team is up to now.

SIGGRAPH: Let’s start at a high level. What is unique about your work at Level Ex in terms of real-time technology?

Sam Glassenberg (SG): We’re using real-time technology to capture the challenges of the practice of medicine with video game mechanics. To do that right — and capture bleeds as well as surgical and clinical challenges — you need to recreate realistic fluids and soft tissues. We do that not only under visible light, but under ultrasound, X-ray, and all of these other imaging modalities that have all sorts of quirky physics and behave in different ways. What we’re doing is recreating that in real time.

At this point, we have over 600,000 medical professionals playing our games, and they’re playing them on mobile. It’s not just a matter of recreating or simulating interventional cardiology under X-ray or the other example that we showed at SIGGRAPH (interventional pulmonology), it’s a matter of making it work on the iPhone GPU. You know, that thing that drops calls all the time. [laughs] In the last two years, we’ve managed to be on a stage like Real-Time Live! and show some crazy cool stuff, like realistic fluids that mix and interact with soft tissue environments. Plus, we have been one of the only projects demoed live from a phone.

SIGGRAPH: Walk us through the technology behind your demo of Cardio Ex.

SG: In our demo, we walked through three examples of ray marching being applied to capture interesting, challenging cases in cardiology and pulmonology. The first example was ray marching X-ray. Interventional cardiology is a specialty where you’re literally navigating metal wires through the vasculature of a live, beating human heart. That procedure is done entirely under X-ray, so you’re playing the entire game visualized as a shadow being cast on an X-ray plate. We developed all sorts of techniques to recreate how these tissues behave and look under X-ray, including things like when you inject radio opaque dye into the patient’s circulatory system so you can actually see the maze of arteries that you’re trying to navigate through.
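As a rough illustration of what "ray marching X-ray" can mean, the sketch below steps a ray through a volume and accumulates attenuation with the Beer-Lambert law, so denser material (such as a dye-filled vessel) casts a darker shadow on the plate. This is not Level Ex's implementation; the function names, the spherical "vessel," and the attenuation values are all hypothetical and chosen only for the example.

```python
import math

def march_xray(mu_at, origin, direction, length, steps=200):
    """Return the fraction of X-ray intensity that survives along one ray.

    mu_at     -- function mapping a 3D point to an attenuation coefficient
    direction -- assumed to be a unit vector
    """
    ds = length / steps
    optical_depth = 0.0
    for i in range(steps):
        t = (i + 0.5) * ds  # sample at the midpoint of each step
        p = tuple(o + t * d for o, d in zip(origin, direction))
        optical_depth += mu_at(p) * ds
    # Beer-Lambert law: intensity falls off exponentially with optical depth
    return math.exp(-optical_depth)

def mu_at(p):
    """Hypothetical scene: a dye-filled vessel (unit sphere) inside soft tissue."""
    x, y, z = p
    inside_vessel = x * x + y * y + z * z <= 1.0
    return 2.0 if inside_vessel else 0.2  # illustrative values, not measured data

through_vessel = march_xray(mu_at, (-5.0, 0.0, 0.0), (1.0, 0.0, 0.0), 10.0)
beside_vessel = march_xray(mu_at, (-5.0, 2.0, 0.0), (1.0, 0.0, 0.0), 10.0)
```

Here `through_vessel` comes out smaller than `beside_vessel`: the ray that crosses the dye loses more intensity, which is why the arteries show up as a shadow.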

The second example was ray marching ultrasound waves. These things move at the speed of sound, not the speed of light. You’re literally simulating sound waves as opposed to X-rays or light waves. Sound waves, in some ways, behave similarly to light, such as the fact that they’ll cast shadows. But, in other ways, they act very differently, such as the fact that they will actually change speed through different mediums. This creates all sorts of interesting artifacts that you only see when you’re looking at a picture generated by sound waves.
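One artifact of this kind can be sketched in a few lines. A scanner typically converts echo time to depth using a single assumed speed of sound (1540 m/s is the conventional soft-tissue figure), so a structure behind slower tissue appears deeper than it really is. The layer layout and the 1450 m/s value below are illustrative assumptions, and the function names are hypothetical; this is not a description of Level Ex's simulation.

```python
ASSUMED_SPEED = 1540.0  # m/s, the single speed the scanner is assumed to use

def apparent_depth(speed_at, true_depth, steps=1000):
    """March a sound ray straight down and return the depth a scanner would display.

    speed_at -- function mapping depth in meters to local speed of sound in m/s
    """
    dz = true_depth / steps
    one_way_time = 0.0
    for i in range(steps):
        z = (i + 0.5) * dz  # midpoint of the current step
        one_way_time += dz / speed_at(z)
    # The echo travels down and back (2 * one_way_time); the scanner halves
    # the round trip and multiplies by its assumed speed.
    return ASSUMED_SPEED * one_way_time

def speed_at(z):
    """Hypothetical anatomy: 2 cm of slower tissue on top of soft tissue."""
    return 1450.0 if z < 0.02 else 1540.0

shown = apparent_depth(speed_at, 0.05)  # reflector truly at 5 cm
```

In this setup `shown` is a little over 5.1 cm, so the reflector is drawn slightly deeper than its true position.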

Then, the last example was using ray marching to create more realistic mucus in the lungs. For this, we used ray marching through signed distance fields to create realistic-looking, flat, refractive, viscous fluids.
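For readers unfamiliar with the technique, ray marching through a signed distance field is often done with sphere tracing: at each step the field reports how far the nearest surface is, so the ray can safely advance by that amount. The sketch below shows only this surface-finding step, with a single sphere standing in for a fluid blob; the refraction and shading a real fluid renderer needs are omitted, and all names and parameters are hypothetical.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 3.0), radius=1.0):
    """Signed distance from point p to a sphere (negative inside)."""
    return math.dist(p, center) - radius

def sphere_trace(sdf, origin, direction, max_steps=128, max_dist=20.0, eps=1e-4):
    """Return the distance along the ray to the first surface, or None on a miss.

    direction is assumed to be a unit vector.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        d = sdf(p)
        if d < eps:       # close enough to count as a hit
            return t
        t += d            # safe step: nothing is closer than d
        if t > max_dist:  # left the scene without hitting anything
            break
    return None

hit = sphere_trace(sphere_sdf, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
miss = sphere_trace(sphere_sdf, (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

The ray aimed at the sphere returns a hit distance of about 2, while the ray aimed away returns `None`.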

SIGGRAPH: How is ray marching different from ray tracing?

SG: The two are very closely related. In fact, ray tracing hardware can also accelerate ray marching. With ray marching, you’re basically moving a ray step-by-step through a series of different media, whereas ray tracing is generally focused on how those rays interact with surfaces: reflecting, refracting, occluding. We had to figure out how to make our algorithms work on a normal GPU. With dedicated ray tracing hardware, you can do crazy, amazing things.

SIGGRAPH: What inspired Level Ex’s focus on technology for healthcare?

SG: I’ve spent my career in the video game industry. I started out at LucasArts making Star Wars games, and was also on the DirectX Graphics team at Microsoft. But, I come from a family of doctors. My grandfather was actually the senior editor of JAMA, The Journal of the American Medical Association. So, that’s where I come from. I’m kind of the “black sheep” of the family, who never went into medicine. Years ago, as a favor to my father, I made a mobile game for him to train his residents on how to do a fiberoptic intubation. I made it pretty quickly and threw it into the App Store so his colleagues could download it. When I checked two years later, it had rallied an audience of 100,000. At that point I thought, “Oh, clearly there’s demand for this type of game. What if we actually took top video game developers from around the industry, folks who’ve worked on everything from ‘Halo’ to ‘Mortal Kombat,’ and put them on the challenge of creating games for doctors?”

SIGGRAPH: Ray tracing hardware was a major aspect of your demo. Where do you see the technology going in the future?

SG: We’re very excited about the capabilities. First of all, we’re excited about the possibility of getting some of these more advanced algorithms running on mobile. Second, we also do VR development (we actually demoed some of our VR content at SIGGRAPH 2018), and we’re excited about the possibility of applying ray tracing there. Finally, we’re excited about using ray tracing in our tool chain for light baking, to help artists make more realistic, better-looking content.

SIGGRAPH: What’s difficult or unique about design for mobile GPUs?

SG: In general, what we see is that the hardware comes out first on PC to enable PC games. Then, years later, it eventually makes its way onto mobile devices. We are excited to jump on it as soon as it does, but we’re also excited to use those PC technologies when we’re creating VR experiences that are tethered to PC.

SIGGRAPH: What has been the most unpredictable effect that technology (old or new) has had on your projects?

SG: In two words: emergent gameplay. When you’re using technology to create any kind of simulation or any kind of realistic environment, you’ll find that fun gameplay emerges from the physics and the graphics. So, when you have an environment where fluids can mix and interact with soft tissues, that opens up into a new range of interesting scenarios you might not have even thought about before. Now, game designers can come up with new, exciting designs built on those technologies.

SIGGRAPH: As a past attendee, what is your favorite memory from a SIGGRAPH conference?

SG: I’ve been going to SIGGRAPH for a long time. My favorite memory has been presenting at Real-Time Live! Getting up in front of 3,000 video game developers, on a stage with the top graphics teams in the world, and presenting our stuff, then coming in second place in audience voting cannot be beat. I have other fond memories, too. In 2004, I actually presented in what was the precursor to Real-Time Live! called “Real-Time Live: Demo or Die.” That year, I think there were over 1,000 people in the audience and they tried voting using laser pointers, so everybody was trying to point in the eye of the presenters. [laughs] I was trying to demo while I couldn’t see anything. The presentation in 2018/2019 was a substantial improvement.

SIGGRAPH: Do you have any advice for future Real-Time Live! submitters or participants?

SG: My advice would be to have fun. It can be very stressful: You’re demoing live and you have only a few minutes. And sometimes you’re demoing, effectively, prototype software that’s still in development. Things crash; things break. You have to do a lot of rehearsal, which can cause the stress to build up and make it feel really intense, especially knowing you’ll be in front of 3,000 of your esteemed colleagues. But, the most important thing is to enjoy and be in the moment. Have fun. Roll with it. It’s a once-in-a-lifetime experience.

Source: SIGGRAPH
