Power Your AI Inference with New NVIDIA Triton and NVIDIA TensorRT Features

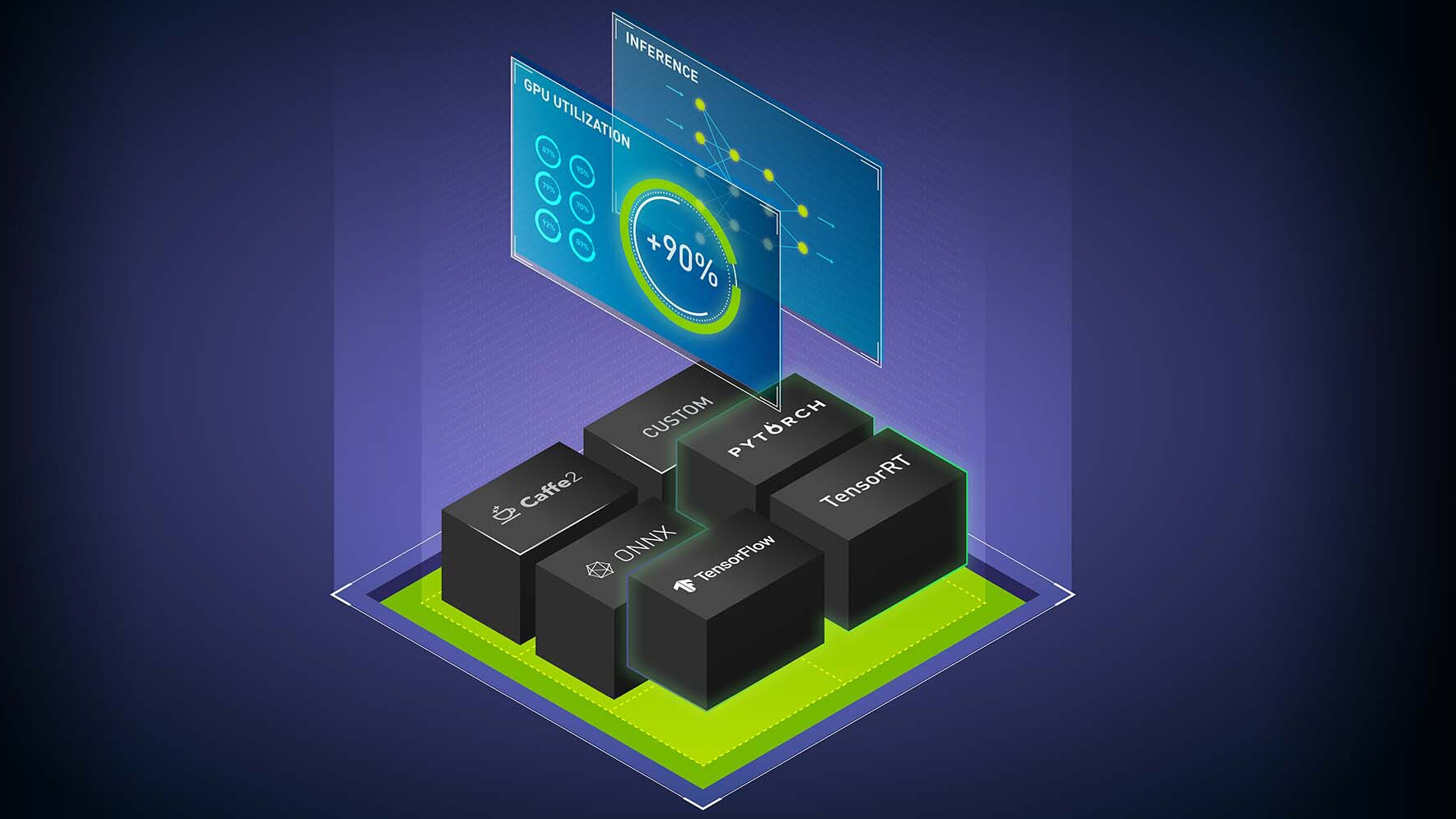

NVIDIA AI inference software consists of NVIDIA Triton Inference Server, open-source inference serving software, and NVIDIA TensorRT, an SDK for high-performance deep learning inference that includes a deep learning inference optimizer and runtime. They deliver accelerated inference for all AI deep learning use cases. NVIDIA Triton also supports traditional machine learning (ML) models and inference on CPUs. This post explains key new features recently added to this software.

NVIDIA Triton

New features in NVIDIA Triton include native Python support with PyTriton, model analyzer updates, and NVIDIA Triton Management Service.

Native Python support with PyTriton

The PyTriton feature provides a simple interface for using NVIDIA Triton Inference Server in Python code. With PyTriton, Python developers can use NVIDIA Triton to serve anything from a single AI model or a simple processing function to an entire inference pipeline.

This native support for NVIDIA Triton in Python enables rapid prototyping and testing of ML models while retaining performance, efficiency, and high hardware utilization. A single line of code brings up NVIDIA Triton, providing benefits such as dynamic batching, concurrent model execution, and support for GPUs and CPUs from within the Python code. This approach eliminates the need to set up model repositories and convert model formats. You can use existing inference pipeline code without modification. To try it, visit triton-inference-server/pytriton on GitHub.
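To make the dynamic batching benefit mentioned above more concrete, here is a minimal conceptual sketch in plain Python. It is not PyTriton's or NVIDIA Triton's implementation, and it does not use the PyTriton API; the names `dynamic_batches`, `serve`, and `double` are hypothetical and exist only for illustration. The idea it shows is that individually queued requests are grouped so the model runs once per batch rather than once per request.

```python
from typing import Callable, List, Sequence


def dynamic_batches(requests: Sequence[float], max_batch_size: int) -> List[List[float]]:
    """Group queued requests into batches of at most max_batch_size, keeping order."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    return [
        list(requests[start:start + max_batch_size])
        for start in range(0, len(requests), max_batch_size)
    ]


def serve(
    requests: Sequence[float],
    model: Callable[[List[float]], List[float]],
    max_batch_size: int,
) -> List[float]:
    """Call the model once per batch and return results in request order."""
    results: List[float] = []
    for batch in dynamic_batches(requests, max_batch_size):
        results.extend(model(batch))
    return results


def double(batch: List[float]) -> List[float]:
    # Hypothetical stand-in for a real model: doubles every input in the batch.
    return [2 * x for x in batch]


if __name__ == "__main__":
    # Five requests with a batch limit of two means three model calls.
    print(dynamic_batches([1, 2, 3, 4, 5], max_batch_size=2))
    print(serve([1, 2, 3, 4, 5], double, max_batch_size=2))
```

A real server also bounds how long a request may wait for a batch to fill; that timing aspect is left out here to keep the sketch short.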

Model analyzer

Model analyzer is a tool that helps find the best NVIDIA Triton model configuration—like batch size, model concurrency, and precision—to deploy efficient inference. You can get to the best configuration in minutes, thanks to the new quick search mode, without having to spend days manually experimenting with configuration parameters.

Model analyzer now supports model ensembles (also called model pipelines) and multiple model analysis in addition to standalone models, which covers modern inference workloads with preprocessing and postprocessing requirements. You can run the model analyzer for the entire ML pipeline. For more information, see the model analyzer documentation.
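The kind of search that model analyzer automates can be illustrated with a small sketch. This is not the model analyzer's algorithm or CLI: it uses an exhaustive grid rather than the quick search mode, and `toy_measure` is a made-up performance model standing in for real profiling runs. All names and numbers here are assumptions for illustration only.

```python
from itertools import product
from typing import Callable, Iterable, Optional, Tuple

# (throughput in inferences per second, latency in milliseconds)
Measurement = Tuple[float, float]


def toy_measure(batch_size: int, concurrency: int) -> Measurement:
    """Made-up performance model; a real tool would profile the served model."""
    latency_ms = 2.0 + 0.5 * batch_size + 1.0 * (concurrency - 1)
    throughput = 1000.0 * batch_size * concurrency / latency_ms
    return throughput, latency_ms


def best_config(
    batch_sizes: Iterable[int],
    concurrencies: Iterable[int],
    latency_budget_ms: float,
    measure: Callable[[int, int], Measurement] = toy_measure,
) -> Optional[Tuple[int, int]]:
    """Return the (batch_size, concurrency) with the highest throughput
    among configurations that stay within the latency budget, or None."""
    best: Optional[Tuple[int, int]] = None
    best_throughput = float("-inf")
    for batch_size, concurrency in product(batch_sizes, concurrencies):
        throughput, latency_ms = measure(batch_size, concurrency)
        if latency_ms <= latency_budget_ms and throughput > best_throughput:
            best, best_throughput = (batch_size, concurrency), throughput
    return best


if __name__ == "__main__":
    print(best_config([1, 2, 4, 8], [1, 2, 4], latency_budget_ms=6.0))
```

An exhaustive grid grows quickly as parameters are added, which is the cost a faster search mode is meant to avoid.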

NVIDIA Triton Management Service

NVIDIA Triton Management Service provides model orchestration functionality for efficient multimodel inference. This functionality, which runs as a production service, loads models on demand and unloads models when not in use.

It allocates GPU resources efficiently by placing as many models as possible on a single GPU server, and it helps group models from different frameworks so that memory is used efficiently. It now also supports autoscaling of NVIDIA Triton instances when inference utilization is high, as well as encrypted (AES-256) communication with applications. Apply for early access to NVIDIA Triton Management Service.
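The load-on-demand and unload-when-idle behavior described above can be sketched as a small model pool with a fixed memory budget. This is a conceptual illustration only, not how NVIDIA Triton Management Service is implemented: the `ModelPool` class is hypothetical, and evicting the least recently used model is an assumed policy chosen for simplicity.

```python
from collections import OrderedDict
from typing import Dict, List


class ModelPool:
    """Toy on-demand model loader with a fixed memory budget.

    Assumption: the least recently used model is unloaded first.
    """

    def __init__(self, budget_mb: int, model_sizes_mb: Dict[str, int]) -> None:
        self.budget_mb = budget_mb
        self.model_sizes_mb = model_sizes_mb
        # Maps loaded model name to its size; order tracks recency of use.
        self.loaded: "OrderedDict[str, int]" = OrderedDict()

    def used_mb(self) -> int:
        return sum(self.loaded.values())

    def request(self, name: str) -> List[str]:
        """Ensure the model is loaded; return the models unloaded to make room."""
        size = self.model_sizes_mb[name]
        if size > self.budget_mb:
            raise ValueError(f"{name} does not fit in the memory budget")
        if name in self.loaded:
            self.loaded.move_to_end(name)  # mark as most recently used
            return []
        evicted: List[str] = []
        while self.used_mb() + size > self.budget_mb:
            victim, _ = self.loaded.popitem(last=False)  # least recently used
            evicted.append(victim)
        self.loaded[name] = size
        return evicted


if __name__ == "__main__":
    pool = ModelPool(budget_mb=10, model_sizes_mb={"a": 4, "b": 4, "c": 4})
    for model_name in ["a", "b", "a", "c"]:
        print(model_name, "-> unloaded:", pool.request(model_name))
```

In this run, requesting `c` exceeds the budget, so `b` is unloaded because `a` was used more recently.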

NVIDIA TensorRT

New features in TensorRT include multi-GPU multi-node inference, performance and hardware optimizations, and more.

Multi-GPU multi-node inference

TensorRT can be used to run multi-GPU multi-node inference for large language models (LLMs). It supports GPT-3 models at 6.7B, 175B, and 530B parameters. These models do not require ONNX conversion; instead, a simple Python API is available to optimize them for multi-GPU inference. This capability is now available in private early access. Contact your NVIDIA account team for more details.

TensorRT 8.6

TensorRT 8.6 is now available in early access and includes the following key features:

- Performance optimizations for generative AI diffusion and transformer models

- Hardware compatibility to build and run on different GPU architectures (NVIDIA Ampere architecture and later)

- Version compatibility to build and run on different TensorRT versions (TensorRT 8.6 and later)

- Optimization levels to trade between build time and inference performance

Customer and partner highlights

Learn how the following new customers and partners use NVIDIA Triton and TensorRT for AI inference.

Oracle AI uses NVIDIA Triton to serve deep learning-based image analysis workloads in OCI Vision. The vision service is used in a variety of use cases, from manufacturing defect inspection to tagging items in online images. Oracle achieved 50% lower latency and 2x throughput with NVIDIA Triton.

Uber leverages NVIDIA Triton to serve hundreds of thousands of predictions per second for DeepETA, the company’s global deep learning-based ETA model.

Roblox, an online experience platform, uses NVIDIA Triton to run all AI models across multiple frameworks to enable use cases like game recommendation, building avatars, content moderation, marketplace ads, and fraud detection. NVIDIA Triton gives the data scientists and ML engineers the freedom to choose their framework: TensorFlow, PyTorch, ONNX, or raw Python code.

DocuSign uses NVIDIA Triton to run NLP and computer vision models for AI-assisted review and understanding of agreements and contract terms. The company achieved a 10x speedup compared to a previous CPU-based solution.

Descript uses TensorRT to optimize its models and accelerate AI inference. Descript lets users replace their video backgrounds and enhance their speech to produce studio-quality content, without the studio.

CoreWeave, a specialized GPU cloud provider, uses NVIDIA Triton to serve LLMs with low latency and high throughput.

NVIDIA inference software delivers the performance, efficiency, and responsiveness critical to powering the next generation of AI products and services—in the cloud, in the data center, at the network edge, and in embedded devices. Get started today with NVIDIA Triton and TensorRT.

By Shankar Chandrasekaran/NVIDIA
