Expanding AI Agent Interface Options with 2D and 3D Digital Human Avatars

When interfacing with generative AI applications, users have multiple communication options: text, voice, or digital avatars.

Traditional chatbot or copilot applications have text interfaces where users type in queries and receive text-based responses. For hands-free communication, speech AI technologies like automatic speech recognition (ASR) and text-to-speech (TTS) facilitate verbal interactions, ideal for scenarios like phone-based customer service. Moreover, combining digital avatars with speech capabilities provides a more dynamic interface for users to engage visually with the application. According to Gartner, by 2028, 45% of organizations with more than 500 employees will leverage employee AI avatars to expand the capacity of human capital.1

Digital avatars can vary widely in style. Some use cases benefit from photorealistic 3D or 2D avatars, while others work better with a stylized or cartoonish avatar.

3D Avatars offer fully immersive experiences, showcasing lifelike movements and photorealism. Developing these avatars requires specialized software and technical expertise, as they involve intricate body animations and high-quality renderings.

2D Avatars are quicker to develop and ideal for web-embedded solutions. They offer a streamlined approach to creating interactive AI; they often require artists for design and animation but are less demanding in terms of technical resources.

To kickstart your creation of a photorealistic digital human, start from the NVIDIA AI Blueprint on digital humans for customer service, which can be tailored to various use cases. The blueprint now includes support for 2D avatars through the NVIDIA Maxine Audio2Face-2D NIM microservice. Additionally, it now gives 3D avatar developers the flexibility to render with Unreal Engine.

How to add a talking digital avatar to your agent application

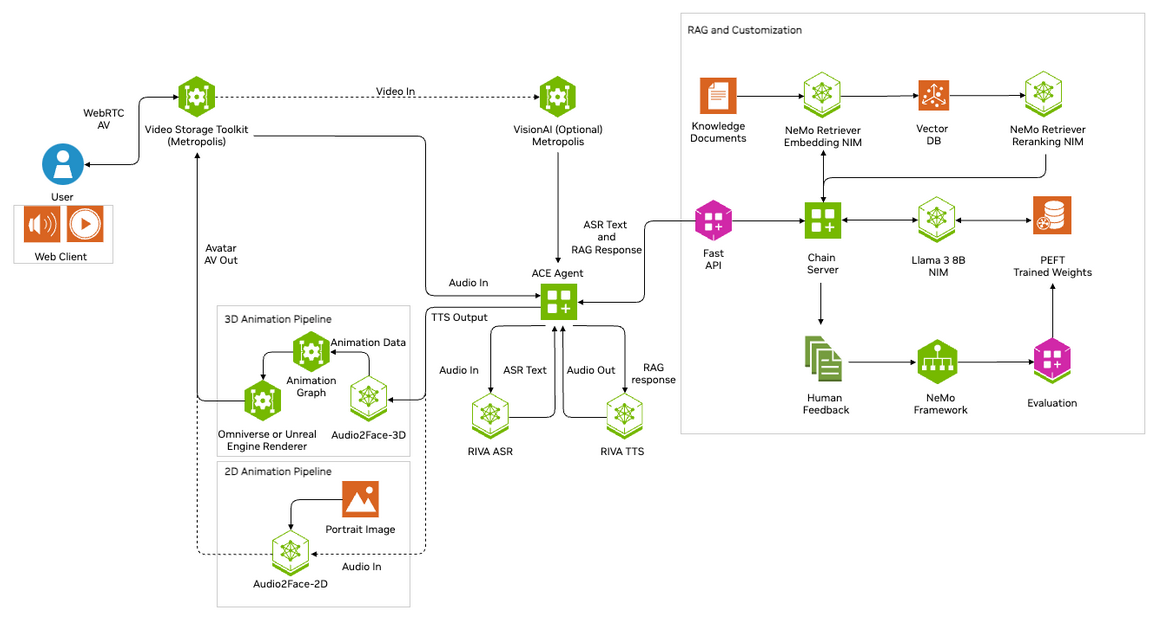

In the AI Blueprint for digital humans, a user interacts with an AI agent that leverages NVIDIA ACE technology (Figure 1).

Diagram illustrating the architecture of the digital human AI Blueprint where a user interacts with an NVIDIA ACE agent that is connected to a RAG pipeline to generate a response.

Figure 1. Architecture diagram for the NVIDIA AI Blueprint for digital humans

The audio input from the user is sent to the ACE agent, which orchestrates the communication between various NIM microservices. The ACE agent uses the Riva Parakeet NIM to convert the audio to text, which is then processed by a RAG pipeline. The RAG pipeline uses the NVIDIA NeMo Retriever embedding and reranking NIM microservices, and an LLM NIM, to respond with relevant context from stored documents.
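The two-stage retrieval described above (retrieve candidates by embedding similarity, then rerank them before the LLM sees them) can be sketched in a few lines. This is a minimal illustration under loud assumptions: `embed` and `rerank_score` are hypothetical toy stand-ins (bag-of-words vectors and term overlap) for the NeMo Retriever embedding and reranking NIM microservices, no real NIM endpoint is called, and `build_prompt` only shows where retrieved context would be handed to an LLM.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a term-count vector."""
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rerank_score(query: str, doc: str) -> float:
    """Hypothetical stand-in for a reranker: share of query terms found in doc."""
    q_terms, d_terms = set(tokenize(query)), set(tokenize(doc))
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


def retrieve_and_rerank(query, docs, k_retrieve=3, k_final=1):
    q_vec = embed(query)
    # Stage 1: cheap similarity search over the whole document collection.
    candidates = sorted(docs, key=lambda d: cosine(q_vec, embed(d)), reverse=True)
    candidates = candidates[:k_retrieve]
    # Stage 2: rescore only the short candidate list with the (toy) reranker.
    return sorted(candidates, key=lambda d: rerank_score(query, d), reverse=True)[:k_final]


def build_prompt(query, context):
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Store opening hours are 9am to 9pm on weekdays",
        "Returns are accepted within 30 days with a receipt",
        "Our kiosks support English and Spanish",
    ]
    query = "What are the store opening hours?"
    print(build_prompt(query, retrieve_and_rerank(query, docs)))
```

The split mirrors the usual reason for pairing an embedding model with a reranker: the first stage is cheap enough to scan many documents, and the more expensive second stage only runs on a handful of candidates.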

Finally, the response is converted back to speech via Riva TTS, and that audio animates the digital human through the Audio2Face-3D NIM or Audio2Face-2D NIM.
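The end-to-end flow (speech to text, text to grounded response, response to speech, speech to animation) can be sketched as a chain of pluggable stages. This is a hypothetical sketch, not the ACE agent's API: `AgentPipeline` and its fields are illustrative names, each stage is a plain callable, and the fake lambdas in the usage example stand in for calls to the real microservices.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class AgentPipeline:
    """Hypothetical orchestration of one conversational turn."""
    asr: Callable[[bytes], str]            # user speech -> text (Riva ASR role)
    answer: Callable[[str], str]           # text -> response (RAG + LLM role)
    tts: Callable[[str], bytes]            # response -> speech (Riva TTS role)
    animate: Callable[[bytes], List[str]]  # speech -> frames (Audio2Face-2D or -3D role)

    def handle_turn(self, user_audio: bytes) -> Tuple[str, str, bytes, List[str]]:
        transcript = self.asr(user_audio)
        reply = self.answer(transcript)
        reply_audio = self.tts(reply)
        frames = self.animate(reply_audio)  # the avatar is driven by the reply audio
        return transcript, reply, reply_audio, frames


if __name__ == "__main__":
    # Fake stages: pretend audio bytes are UTF-8 text, one "frame" per 10 bytes.
    pipeline = AgentPipeline(
        asr=lambda audio: audio.decode("utf-8"),
        answer=lambda text: f"You asked: {text}",
        tts=lambda text: text.encode("utf-8"),
        animate=lambda audio: [f"frame{i}" for i in range(len(audio) // 10)],
    )
    print(pipeline.handle_turn(b"store hours"))
```

Keeping `animate` as a swappable stage reflects the point made above: the same conversational pipeline can drive either a 2D or a 3D avatar, because only the final stage changes.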

Considerations when designing your AI agent application

In global enterprises, language barriers can slow down operations. AI-powered avatars with multilingual capabilities can communicate with users across languages. The digital human AI Blueprint provides conversational AI capabilities that simulate human interactions and accommodate users’ speech styles and languages through Riva ASR and neural machine translation (NMT), along with intelligent interruption and barge-in support.
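Barge-in means the user can start talking while the avatar is still speaking, and the agent yields instead of finishing its reply. A minimal sketch of that turn-taking logic follows, assuming a simple event-driven state machine; `TurnManager`, its states, and its event methods are hypothetical names, not the blueprint's interface, and a production system would also need voice activity detection and a way to tell real interruptions from brief acknowledgements.

```python
from enum import Enum, auto


class State(Enum):
    LISTENING = auto()
    THINKING = auto()
    SPEAKING = auto()


class TurnManager:
    """Hypothetical turn-taking state machine with barge-in support."""

    def __init__(self):
        self.state = State.LISTENING
        self.pending_chunks = []  # reply chunks not yet played to the user
        self.played = []          # reply chunks already played

    def on_user_final_transcript(self):
        # The user finished an utterance; the agent starts preparing a reply.
        if self.state is State.LISTENING:
            self.state = State.THINKING

    def on_reply_ready(self, chunks):
        if self.state is State.THINKING:
            self.pending_chunks = list(chunks)
            self.state = State.SPEAKING

    def on_playback_tick(self):
        # Play one chunk; return to listening once the reply is finished.
        if self.state is State.SPEAKING and self.pending_chunks:
            self.played.append(self.pending_chunks.pop(0))
            if not self.pending_chunks:
                self.state = State.LISTENING

    def on_user_speech_start(self):
        # Barge-in: the user talks over the avatar, so drop the rest of the reply.
        if self.state is State.SPEAKING:
            self.pending_chunks.clear()
            self.state = State.LISTENING


if __name__ == "__main__":
    tm = TurnManager()
    tm.on_user_final_transcript()
    tm.on_reply_ready(["Our", "store", "opens", "at", "9am"])
    tm.on_playback_tick()
    tm.on_playback_tick()
    tm.on_user_speech_start()   # user interrupts after two chunks
    print(tm.state, tm.played)
```

In a multilingual setup, translation would sit outside this state machine, between the ASR transcript and the agent (and again before TTS); the turn-taking logic itself is language-independent.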

One of the key benefits of digital human AI agents is their ability to function as “always-on” resources for employees and customers alike. Because RAG-powered AI agents ground their answers in an enterprise’s stored documents, they can improve over time as that knowledge base is expanded and refined, providing more accurate responses and better user experiences.

For enterprises considering digital human interfaces, choosing the right avatar and rendering option depends on the use case and customization preferences.

Use Case: 3D avatars are ideal for highly immersive use cases, such as physical stores, kiosks, or primarily one-to-one interactions, while 2D avatars are effective for web or mobile conversational AI use cases.

Development and Customization Preferences: Teams with 3D and animation expertise can leverage their skillset to create an immersive and ultra-realistic avatar, while teams looking to iterate and customize quickly can benefit from the simplicity of 2D avatars.

Scaling Considerations: Scaling is an important consideration when evaluating avatars and their rendering options. Stream throughput, especially for 3D avatars, depends heavily on the choice and quality of the character asset, the desired output resolution, and the rendering option (Omniverse Renderer or Unreal Engine); together, these factors play a critical role in determining the per-stream compute footprint.
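Once you have profiled a given avatar configuration (asset, resolution, renderer), the per-stream compute footprint turns into a simple capacity estimate. The sketch below assumes the footprint is expressed as the percentage of one GPU a single stream consumes, with optional headroom reserved; the function names and the numbers in the example are hypothetical placeholders, not NVIDIA benchmarks.

```python
import math


def streams_per_gpu(per_stream_util_pct: float, headroom_pct: float = 0.0) -> int:
    """How many avatar streams fit on one GPU, given a profiled per-stream cost."""
    if per_stream_util_pct <= 0:
        raise ValueError("per-stream utilization must be positive")
    usable_pct = 100.0 - headroom_pct  # capacity left after reserving headroom
    return math.floor(usable_pct / per_stream_util_pct)


def gpus_needed(concurrent_streams: int, per_gpu: int) -> int:
    """Number of GPUs required to serve the target concurrency."""
    if per_gpu < 1:
        raise ValueError("a single stream does not fit on one GPU")
    return -(-concurrent_streams // per_gpu)  # integer ceiling division


if __name__ == "__main__":
    # Placeholder numbers for illustration only: 30% of a GPU per stream.
    fit = streams_per_gpu(30.0, headroom_pct=20.0)
    print(fit, gpus_needed(10, fit))
```

Because the result is floored per GPU, a small change in per-stream cost (for example, a lighter asset or a lower output resolution) can change the stream count per GPU by a whole unit, which is why these choices matter so much for 3D avatars.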

NVIDIA Audio2Face-2D creates lifelike 2D avatars from just a portrait image and voice input. Simple configuration lets developers iterate quickly to produce the target avatars and animations for their digital human use cases. With real-time output and cloud-native deployment, 2D digital humans are well suited to interactive use cases, such as streaming avatars in web-embedded solutions.

For example, enterprises that want to deploy AI agents across multiple devices and insert digital humans into web- or mobile-first customer journeys can benefit from the reduced hardware demands of 2D avatars.

3D photorealistic avatars provide an unmatched immersive experience for use cases demanding highly empathetic user engagement. NVIDIA Audio2Face-3D and Animation NIM microservices animate a 3D character by generating blendshapes along with subtle head and body animation to create an immersive, photorealistic avatar. The digital human AI Blueprint now supports two rendering options for 3D avatars, Omniverse Renderer and Unreal Engine Renderer, giving developers the flexibility to integrate the rendering option of their choice.
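Blendshape animation works by storing a neutral face mesh plus a set of target shapes, then mixing them with per-frame weights: each vertex is v = v0 + Σ w_i (t_i − v0). The sketch below shows that standard linear (delta) blendshape evaluation on a toy two-vertex mesh, assuming the animation service supplies one weight per named shape per frame; the shape names `jawOpen` and `smile` and the mesh data are illustrative, and a renderer such as Omniverse or Unreal Engine performs this step on the real character asset.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(
    neutral: List[Vec3],
    targets: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Linear blendshapes: each vertex = neutral + sum of weighted target deltas."""
    result = []
    for idx, (x0, y0, z0) in enumerate(neutral):
        dx = dy = dz = 0.0
        for name, w in weights.items():
            tx, ty, tz = targets[name][idx]
            # Accumulate the weighted offset of this target from the neutral pose.
            dx += w * (tx - x0)
            dy += w * (ty - y0)
            dz += w * (tz - z0)
        result.append((x0 + dx, y0 + dy, z0 + dz))
    return result


if __name__ == "__main__":
    neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    targets = {
        "jawOpen": [(0.0, -1.0, 0.0), (1.0, 0.0, 0.0)],  # moves vertex 0 down
        "smile": [(0.0, 0.0, 0.0), (1.0, 0.5, 0.0)],     # moves vertex 1 up
    }
    # One frame of hypothetical weights from an audio-driven animation model.
    print(apply_blendshapes(neutral, targets, {"jawOpen": 0.5, "smile": 1.0}))
```

Because only a short vector of weights changes per frame, the audio-to-animation model can stay independent of the mesh, and the renderer applies the weights to whatever character asset is loaded.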

To explore how digital humans can enhance your enterprise, visit the NVIDIA API catalog to learn about the different avatar options.

Getting started with digital avatars

For hands-on development with Audio2Face-2D and Unreal Engine NIM microservices, apply for ACE Early Access or dive into the digital human AI Blueprint technical blog to learn how you can add digital human interfaces to personalize chatbot applications.

1 Gartner®, Hype Cycle for the Future of Work, 2024, by Tori Paulman, Emily Rose McRae, et al., July 2024

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.

By Trisha Tripathi/NVIDIA
